Tag: MoE

Why OpenAI’s New Open Weight Models Are a Big Deal

The smoke is still clearing from OpenAI’s big GPT-5 launch today, but the verdict is starting to come in on the company’s other big announcement this week: the launch of two new open weight models, gpt-oss-120b and g Read more…

Snowflake Touts Speed, Efficiency of New ‘Arctic’ LLM

Snowflake today took the wraps off Arctic, a new large language model (LLM) that is available under an Apache 2.0 license. The company says Arctic’s unique mixture-of-experts (MoE) architecture, combined with its relat Read more…

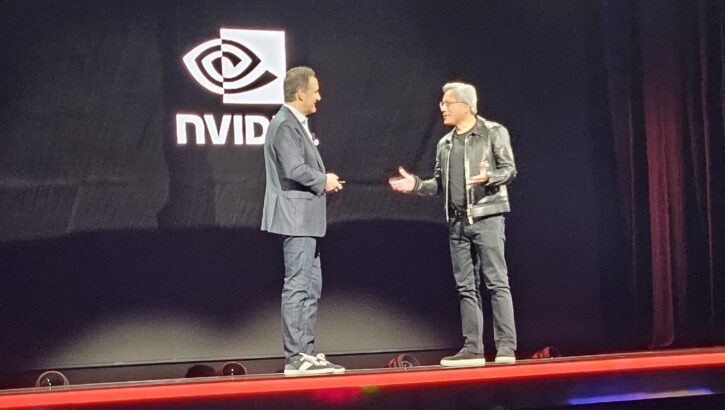

AWS Teases 65 Exaflop ‘Ultra-Cluster’ with Nvidia, Launches New Chips

AWS yesterday unveiled new EC2 instances geared toward tackling some of the fastest growing workloads, including AI training and big data analytics. During his re:Invent keynote, CEO Adam Selipsky also welcomed Nvidia fo Read more…

October 1, 2025

- Ai2 Launches Asta DataVoyager: Data-Driven Discovery and Analysis for Science

- SAS: New Study Shows Trust in GenAI Surges Globally Despite Gaps in AI Safeguards

- Fortanix and BigID Integration Automates Discovery, Classification and Protection of Sensitive Data

- Perforce 2025 State of Data Compliance Report Reveals Confusion Around AI Data Privacy

- ADDF’s SpeechDx Releases First Dataset and Partners with Callyope on AI Alzheimer’s Detection

- StorONE and Western Digital Deliver Scalable, Smart Storage for America’s Test Kitchen

September 30, 2025

- Dresner Advisory Services Publishes 2025 Financial Consolidation, Close Management, and Financial Reporting Market Study

- StreamNative Introduces Unified Platform for Real-Time AI with Ursa and Orca

- DataJoint Raises $4.9M Seed Round to Advance AI-Driven Data Management in Life Sciences

- Precisely Integrates Master Data Management with Data Governance to Power AI and Advanced Analytics

- SnapLogic and Antemia Partner to Advance Digital Lifecycle Integration for Engineering Enterprises

- Datawizz Raises $12.5M Seed Round to Make AI Efficient, Economical, and Sustainable

- Databricks Announces Data Intelligence for Cybersecurity

- LandingAI Introduces ADE DPT-2 for Improved Extraction from Text, Tables, and Visual Data

- MinIO Introduces Iceberg Tables in AIStor, Unifying Enterprise Data for AI

- Cerebras Systems Raises $1.1B Series G at $8.1B Valuation

September 29, 2025

- Esri Releases New Book Demonstrating How GeoAI Powers Greater Automation, Prediction, and Optimization

- SnapLogic Announces Integreat 2025 Tour: Bringing AI-Powered Integration to the Enterprise

- Couchbase Announces Appointment of BJ Schaknowski as CEO and Amir Jafari as CFO

September 26, 2025

- Inside Sibyl, Google’s Massively Parallel Machine Learning Platform

- Rethinking Risk: The Role of Selective Retrieval in Data Lake Strategies

- In Order to Scale AI with Confidence, Enterprise CTOs Must Unlock the Value of Unstructured Data

- What Are Reasoning Models and Why You Should Care

- Top-Down or Bottom-Up Data Model Design: Which is Best?

- Beyond Words: Battle for Semantic Layer Supremacy Heats Up

- Meet Krishna Subramanian, a 2025 BigDATAwire Person to Watch

- What Is MosaicML, and Why Is Databricks Buying It For $1.3B?

- Why Does Building AI Feel Like Assembling IKEA Furniture?

- How to Make Data Work for What’s Next

- Mathematica Helps Crack Zodiac Killer’s Code

- The Top Five Data Labeling Firms According to Everest Group

- Solidigm Celebrates World’s Largest SSD with ‘122 Day’

- AI Hype Cycle: Gartner Charts the Rise of Agents, ModelOps, Synthetic Data, and AI Engineering

- MIT Report Flags 95% GenAI Failure Rate, But Critics Say It Oversimplifies

- Promethium Wants to Make Self Service Data Work at AI Scale

- AI Agents Debut Atop Gartner 2025 Hype Cycle for Emerging Tech

- Sphinx Emerges with Copilot for Data Science

- Global DataSphere to Hit 175 Zettabytes by 2025, IDC Says

- Alation and Immuta in Data Access Hookup

- Seagate Unveils IronWolf Pro 24TB Hard Drive for SMBs and Enterprises

- DataSnap Expands with AI-Enabled Embedded Analytics to Accelerate Growth for Modern Businesses

- Snowflake, Salesforce, dbt Labs, and More Launch Open Semantic Interchange Initiative to Standardize Data Semantics

- Gartner Predicts 40% of Generative AI Solutions Will Be Multimodal By 2027

- Qlik Announces Canada Cloud Region to Empower Data Sovereignty and AI Innovation

- EY Announces Alliance with Boomi to Offer Broad Integrated Solutions and AI-Powered Transformation

- Google Cloud’s 2025 DORA Report Finds 90% of Developers Now Use AI in Daily Workflows

- Deloitte Survey Finds AI Use and Tech Investments Top Priorities for Private Companies in 2024

- Databricks Surpasses $4B Revenue Run-Rate, Exceeding $1B AI Revenue Run-Rate

- Precisely Unveils AI Agents and Copilot for the Data Integrity Suite
