TPC25 Provides Glimpse at Future of AI-Powered Science

How will AI impact science? Nobody knows the full answer to that question, but last week’s Trillion Parameter Consortium conference, dubbed TPC25, provided tantalizing hints at what’s to come.

What could one scientist do with 1,000 AI agents at her beck and call, each with a human IQ equivalent of 140? That was the fascinating possibility posed by Argonne National Lab’s Rick Stevens, who is one of the principals of the Trillion Parameter Consortium.

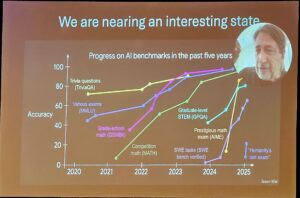

“We’re seeing, of course, dramatic improvement on benchmarks,” Stevens said at TPC25, which attracted 350 attendees to a San Jose hotel ballroom. “These are showing enormous progress.”

Stevens shared results from multiple benchmarks showing remarkable AI gains across disciplines ranging from math to trivia to coding. He also described how he gave OpenAI’s Deep Research model a single image of a table describing some complex chemical structures and asked it to analyze the table.

“And 10 minutes later, I got an 18-page customized review article where the sole input, besides a couple lines of my prompt, was this picture,” Stevens said. “We’ve created systems that give us the opportunities to break through barriers of understanding or access to knowledge, and I think that’s really amazing in the current landscape.”

How science will harness this newfound capability, of course, is the big question. When Stevens and his HPC colleagues at RIKEN, Barcelona Supercomputing Center, and other organizations created TPC three years ago, the belief was that models would keep getting bigger and better, allowing scientists to make breakthroughs on truly tough scientific challenges. For instance, could you load all the scientific literature and laboratory results on classical physics and quantum physics, and then ask the model to describe a link between gravity and quantum field theory? Could it design novel molecules that convert carbon dioxide into ethanol more efficiently? Could AI find a cure for cancer?

While the models have gotten bigger and better, they have not reached the 20 trillion to 30 trillion parameters that Stevens and other TPC members predicted a few years ago we would have by now. And as we’ve learned more about how foundation models work, the prospect of earth-rattling scientific discoveries seems to have dimmed a bit. In its place have come more pragmatic promises, such as using AI agents to accelerate the work of existing scientists, and with it the current pace of science.

AI is already being adopted in scientific labs, and is showing the potential to turn a single human scientist into a “super scientist,” according to Satoshi Matsuoka, Director of the RIKEN Center for Computational Science (R-CCS).

“We want to change the way that we do science,” Matsuoka said during his TPC25 session last week. “We’re not going to just use AI as an edge tool…But rather want to use AI everywhere.”

Rather than building bigger models and training them on bigger datasets, the current path calls for using the new class of reasoning AI models that have emerged over the past six months, Matsuoka said.

“Going from a trillion to 20 trillion would incur several thousand[fold] increase in computational performance, so unless you’re [Elon] Musk, you’re not going to be able to afford that,” Matsuoka said. Instead of “one big model to rule the world,” scientists would use “an ensemble of models, somewhat like experts, but where you have much more division of labor.”

Ian Foster, director of Argonne’s Data Science and Learning Division, shared a benchmark result with the TPC25 crowd showing that reasoning models have progressed rapidly over the past six months, from performing no better than a PhD holder working outside their specialty to performing at the level of a PhD holder with access to Google search.

“They’re not just learning facts and structures in the world,” Foster said. “They’re also learning how to reason, and this is allowing these models to tackle these challenging problems.”

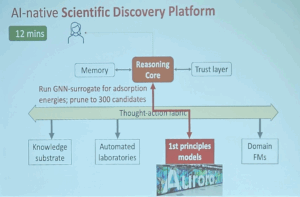

Foster proposed the creation of a “thought action fabric” that incorporates reasoning AI models to accelerate the scientific process. This fabric would coordinate AI agents across multiple tasks, from generating hypotheses and setting up experiments to running those experiments and analyzing the results.

We are now at the stage of figuring out how to build systems that could unleash a wave of scientific productivity, if not creativity, and many details remain to be worked out. One of the biggest is evaluating the reasoning chains that AI models produce and figuring out how to improve them. If large sums are going to be spent building and running these systems, then controls need to be put in place, Foster said.

One possible side effect of advances in AI for science is that fewer human scientists may be needed, Foster said.

“I think historically, over the last 30 years, we’ve increased the speed of our computers by how many orders of magnitude, but we haven’t increased the number of scientists by any significant fraction,” he said. “And I think we’re going to see here similarly, we probably need to spend more money on resources, but because we’re going to perform many more experiments, many more simulations, we probably are going to require fewer people. We’ll just tackle more and more complex problems.”

While AI may not be progressing toward ever-bigger foundation models, the emergence of reasoning models has kept excitement high among the folks at TPC and in the AI-for-science community at large. Big challenges remain, from mitigating hallucinations and securing the Model Context Protocol (MCP) to finding enough electricity to power all the data centers that will need to be built.

But the bottom line is that never before has mankind had the ability to so easily convert electrical or mechanical power into cognitive labor. The potential results are tantalizing to think about, as Stevens summarized.

“It’s the question of our age, which is what is the relationship between people and scientists and academics in some sense, and this engine that just converts power into cognitive labor?” Stevens asked. “How do we work that relationship? What should that relationship be, and what should the goals of an engine able to produce such output be?

“That’s the question that I hope people are thinking about.”
