(Ken Cook/Shutterstock)

Zoom upset customers when it failed to properly inform them it was using audio, video, and transcripts of calls to train its AI models. Are similar data pitfalls lurking for other software providers?

Zoom was forced into damage control mode earlier this month after customers became outraged that the company was harvesting their data without their knowledge. The uproar started on August 6, when Stack Diary published a story about the lack of consent for data collection in Zoom’s terms and conditions.

After a couple of false starts, Zoom changed its terms to clearly state that it “does not use any of your audio, video, chat, screen sharing, attachments or other communications…to train Zoom or third-party artificial intelligence models.”

While your friendly neighborhood video call app may have backed down from its ambitious data harvesting and AI training project, it raises the question: What other software companies or software as a service (SaaS) providers are doing something similar?

SaaS Data Growth

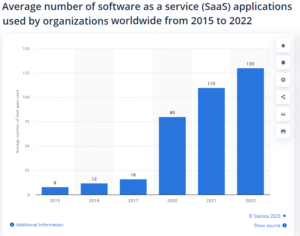

Companies like Salesforce, NetSuite, and ServiceNow grew their fledgling SaaS offerings for CRM, ERP, and service desk software into massive businesses generating billions of dollars from tens of thousands of companies. Microsoft, Oracle, SAP, and other enterprise software vendors followed suit, and today the average company uses 130 different SaaS tools, according to Statista.

Consumer-focused Web giants like Google and Facebook faced scrutiny early on for their data collection and processing efforts. There was pushback when users of “free” services like Gmail and social media apps discovered that they were paying for the service with their data, i.e., that they were the product.

But business SaaS vendors largely stayed under the radar regarding collection and use of their customers’ data, says Shiva Nathan, the CEO and founder of Onymos, a provider of a privacy-enhanced Web application framework.

“SaaS was a great thing back in the early 2000s,” Nathan says. “When the pendulum swung from on-premise data centers to the cloud, people didn’t think two steps ahead to say that, oh along with the infrastructure, we also moved our data too.”

SaaS providers are sitting on a veritable goldmine of customer data, and they’re under pressure to monetize it, often using AI. SaaS providers will often state that they collect customer data for something generic, such as “to improve the service,” which sounds good, but they often use it for analytics or to train an AI model that the customer isn’t directly benefiting from. That raises uncomfortable questions about consent and about who actually benefits from the data.

“Enterprise always thought that I’m paying for this service, which means that my data is not going to be misused or used for some other purposes,” Nathan tells Datanami. “The customers are just now getting aware that their data is being used by someone that they did not think was going to use the data.”

Uncertain Terms

The big problem is that many SaaS providers don’t clearly state what they’re doing with the data, and use intentionally vague language in their customer agreements, Nathan says.

Zoom was caught red-handed collecting audio, video, and transcripts of calls to build AI models without consent until it was called out on the practice. Even then, it took the company a couple of tries before it spelled out its data collection practices in plain English.

Many other SaaS vendors have successfully avoided scrutiny regarding their data collection efforts up until this point, Nathan says. “People didn’t think that far ahead,” he says. “If you use Stripe, it gets all your financial data. If you use Okta, it gets all your authentication data.”

SaaS vendors are intentionally vague in their terms of service, which gives them plausible deniability, Nathan says. “Their attorneys are smart enough to write the contract in such a broad language that they’re not breaking the terms,” he says.

When a SaaS vendor uses its customers’ data to train machine learning models, it’s impossible to back that data out. Just as a child can’t unsee an unsettling TV show, an ML model can’t be turned back in time to forget what it’s learned, Nathan says.

Using this data to train AI models is a clear violation of GDPR and other privacy laws, Nathan says, because no consent was given in the first place, and there’s no way to pull the private data back out of the model. Things are exacerbated when other customers’ data is inadvertently collected.

“The whole Titanic-heading-to-the-iceberg moment is going to happen where the service providers have to go back and look at their customers’ data usage and most of the time it’s not their customer, it’s their customers’ customer,” he says. “We will have lawsuits on this soon.”

It’s worth noting that this dynamic has impacted OpenAI, maker of GPT-4 and ChatGPT. OpenAI CEO Sam Altman has repeatedly said OpenAI does not use any data sent to the company’s AI models to train the models. But many companies clearly are suspicious of that claim, and in turn are seeking to build their own large language models, which they will control by running in house or in their own cloud account.

Privacy as Feature

As businesses become savvier about the way SaaS vendors monetize their data, they will begin to demand more options to opt out of data sharing, or to receive some benefit in return. Just as Apple has made privacy a core feature of its iOS ecosystem, B2B vendors will learn that privacy has market value, and that demand will help steer the market.

Nathan is betting that his business model with Onymos, which provides a privacy-protected framework for hosting Web, mobile, and IoT applications, will resonate in the broader B2B SaaS ecosystem. Smaller vendors that are scrapping for market share will either not collect any customer data or ensure that any sensitive data is obfuscated and anonymized before it makes its way into the model.
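To make the obfuscation step concrete, here is a minimal Python sketch, assuming a vendor wants to drop or pseudonymize sensitive fields before records reach a training pipeline. The field names, the `scrub_record` helper, and the secret key are hypothetical, and this is not how Onymos or any particular vendor does it. Note that keyed hashing is pseudonymization rather than true anonymization: anyone holding the key can re-identify values by hashing candidate inputs, and GDPR still treats pseudonymized data as personal data, so the key should stay with the customer.

```python
import hashlib
import hmac

# Hypothetical schema: fields that identify a person or business.
SENSITIVE_FIELDS = {"email", "customer_name", "phone"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace a value with a keyed HMAC-SHA256 digest (truncated to 16 hex chars)."""
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()[:16]


def scrub_record(record: dict, key: bytes,
                 drop: frozenset = frozenset({"transcript"})) -> dict:
    """Return a copy of `record` that is safer to feed into a training pipeline.

    Fields listed in `drop` are removed entirely. Fields in SENSITIVE_FIELDS are
    replaced with stable pseudonyms, so the same input always maps to the same
    token and records can still be joined without exposing raw values.
    """
    cleaned = {}
    for field, value in record.items():
        if field in drop:
            continue  # never let this field leave the customer's boundary
        if field in SENSITIVE_FIELDS and value is not None:
            cleaned[field] = pseudonymize(str(value), key)
        else:
            cleaned[field] = value
    return cleaned


# Usage: the key is a placeholder; in practice it would be held by the customer.
key = b"customer-held-secret"
row = {"email": "ana@example.com", "plan": "pro", "transcript": "call audio text"}
print(scrub_record(row, key))
```

Dropping a field is the only option here that guarantees the value never reaches a model; pseudonymizing keeps analytic joins possible at the cost of weaker protection.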

Putting businesses back in charge of their own data will not only protect the privacy of businesses and their customers but also shrink the attack surface for data-hungry hackers, all while enabling customers to use AI on their own data, Nathan says.

“The fundamental paradigm that we are studying is that there doesn’t need to even be a [single] honeypot,” he says. “Honey can be strewn all around the place, and the algorithms can be run on the honey. It’s a different paradigm that we’re trying to push to the market.”
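Nathan’s image of honey “strewn all around the place” describes moving the computation to the data instead of pooling the data in one place. Here is a minimal Python sketch of that idea, assuming the computation is a simple average: each data holder runs the hypothetical `summarize_locally` function on its own records and shares only a count and a sum, and a coordinator combines those summaries. This is an illustration of the general pattern, not a description of how Onymos works. Real systems need further safeguards, such as minimum group sizes, because aggregates over very few records can still reveal individual values.

```python
from dataclasses import dataclass
from typing import Iterable, List


@dataclass
class LocalSummary:
    count: int    # number of records at one site
    total: float  # sum of the values at that site


def summarize_locally(values: Iterable[float]) -> LocalSummary:
    """Runs where the data lives; only the count and the sum leave this function."""
    vals = list(values)
    return LocalSummary(count=len(vals), total=float(sum(vals)))


def combine(summaries: List[LocalSummary]) -> float:
    """Coordinator step: computes a global mean from per-site summaries only."""
    n = sum(s.count for s in summaries)
    if n == 0:
        raise ValueError("no records across sites")
    return sum(s.total for s in summaries) / n


# Usage: two hypothetical data holders that never share raw rows.
site_a = [120.0, 80.0, 100.0]
site_b = [50.0, 150.0]
print(combine([summarize_locally(site_a), summarize_locally(site_b)]))  # 100.0
```

The coordinator never sees an individual record; it works entirely from the two numbers each site chooses to release.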

Unfortunately, this new paradigm likely won’t change the practices of the monopolists, who will continue to extract as much data from customers as possible, Nathan says.

“The most monopolistic SaaS provider would say, if you want to use my service, I get your data. You don’t have any other recourse,” he says. “It will make the larger operations that are powerful become even more powerful.”

