Nvidia Jetson Orin

Amid the flood of news coming out of Nvidia’s GPU Technology Conference (GTC) today were a pair of announcements aimed at accelerating the development of AI on the edge and enabling autonomous mobile robots, or AMRs.

First, let’s cover Nvidia’s supercomputer for edge AI, dubbed Jetson. The company today launched Jetson AGX Orin, its most powerful GPU-powered device designed for AI inferencing at the edge and for powering AI in embedded devices.

Armed with an Ampere-class Nvidia GPU, up to 12 Arm Cortex CPU cores, and up to 32 GB of RAM, Jetson AGX Orin is capable of delivering 275 trillion operations per second (TOPS) on INT8 workloads, which is more than an 8x boost compared to the previous top-end device, the Jetson AGX Xavier, Nvidia said.

Jetson AGX Orin is pin- and software-compatible with the Xavier model, so the 6,000 or so customers that have rolled out products with the AI processor in them, including John Deere, Medtronic, and Cisco, can basically just plug the new device into the solutions they have been developing over the past three or four years, said Deepu Talla, Nvidia’s vice president of embedded and edge computing.

The developer kit for Jetson AGX Orin will be available this week at a starting price of $1,999, enabling users to get started with developing solutions for the new offering. Delivery of production-level Jetson AGX Orin devices will start in the fourth quarter, and the units will start at $399.

Recent developments at Nvidia will accelerate the creation of AI applications, Talla said.

“Until a year or two ago, very few companies could build these AI products, because creating an AI model has actually been very difficult,” he said. “We’ve heard it takes months if not a year-plus in some cases, and then it’s…a continuous iterative process. You’re not done ever with the AI model.”

However, Nvidia has been able to reduce that time considerably by doing three things, Talla said.

The first is a set of pre-trained models for both computer vision and conversational AI. The second is the ability to generate synthetic data on its new Omniverse platform. The third is transfer learning, which lets customers take those pre-trained models and customize them to their exact specifications by training with “both physical real data and synthetic data,” he said.
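The general idea behind that third step can be sketched in plain Python. This is a toy illustration, not Nvidia’s tooling: `pretrained_features` is a hypothetical stand-in for a frozen pre-trained model, the “real” and “synthetic” datasets are randomly generated here, and only a small classifier head is trained on the combined data.

```python
import math
import random

def pretrained_features(x):
    """Hypothetical frozen backbone: fixed features, never updated in training."""
    a, b = x
    return [a, b, a * b, 1.0]

def make_data(n, noise, rng):
    """Toy labeled samples; noise > 0 mimics imperfect real-world measurements."""
    samples = []
    for _ in range(n):
        a, b = rng.uniform(-1, 1), rng.uniform(-1, 1)
        label = 1 if a + b > 0 else 0
        samples.append(((a + rng.gauss(0, noise), b + rng.gauss(0, noise)), label))
    return samples

def train_head(samples, epochs=50, lr=0.5):
    """Fit a logistic-regression head on top of the frozen features with SGD."""
    w = [0.0] * 4
    for _ in range(epochs):
        for x, y in samples:
            f = pretrained_features(x)
            z = sum(wi * fi for wi, fi in zip(w, f))
            z = max(-30.0, min(30.0, z))  # clamp to keep exp() well behaved
            p = 1.0 / (1.0 + math.exp(-z))
            for i in range(len(w)):
                w[i] -= lr * (p - y) * f[i]
    return w

def predict(w, x):
    z = sum(wi * fi for wi, fi in zip(w, pretrained_features(x)))
    return 1 if z > 0 else 0

rng = random.Random(0)
real = make_data(40, 0.05, rng)        # small, noisy "physical real data"
synthetic = make_data(200, 0.0, rng)   # larger, clean "synthetic data"
train = real + synthetic
rng.shuffle(train)

head = train_head(train)
held_out = make_data(200, 0.0, rng)
acc = sum(predict(head, x) == y for x, y in held_out) / len(held_out)
print(f"held-out accuracy: {acc:.2f}")
```

Because the backbone stays fixed and only four weights are learned, training takes well under a second here, which is the same trade-off that makes customizing a pre-trained model faster than building one from scratch.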

“We are seeing [a] tremendous amount of adoption because [we] just make it so easy to create AI bots,” Talla said.

Nvidia is developing simulation tools to help developers create AMRs that can navigate complex real-world environments (Image courtesy Nvidia)

Nvidia also announced the release of Isaac Nova Orin, a reference platform for developing AMRs trained with the company’s AI tech.

The platform combines two of the new Jetson AGX Orin modules discussed above, giving it 550 TOPS of compute capacity, along with additional hardware, software, and simulation capabilities to enable developers to create AMRs that work in specific locations. Isaac Nova Orin will also be outfitted with a slew of sensors, including regular cameras, radar, lidar, and ultrasonic sensors, to detect physical objects in the real world.
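The compute figures quoted in this article can be checked with a few lines of Python. The variable names are illustrative, and the sketch assumes the platform total is simply the sum of its two modules’ peak INT8 ratings, as the article’s numbers imply.

```python
# Figures taken from the article; names are illustrative, not Nvidia identifiers.
ORIN_INT8_TOPS = 275       # peak INT8 throughput of one Jetson AGX Orin
MODULES_IN_NOVA_ORIN = 2   # Isaac Nova Orin combines two modules
CLAIMED_SPEEDUP = 8        # "more than an 8x boost" over Jetson AGX Xavier

# Combined peak throughput of the two-module reference platform.
nova_orin_tops = ORIN_INT8_TOPS * MODULES_IN_NOVA_ORIN

# If Orin is more than 8x faster, Xavier's rating must sit below this value.
xavier_tops_ceiling = ORIN_INT8_TOPS / CLAIMED_SPEEDUP

print(f"Isaac Nova Orin: {nova_orin_tops} TOPS")
print(f"Implied Xavier rating: below {xavier_tops_ceiling} TOPS")
```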

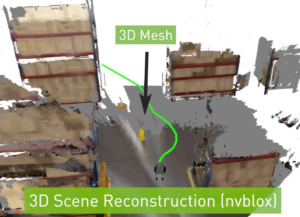

Nvidia will also ship new software and simulation capabilities to accelerate AMR deployments. A key element there is another offering called Isaac Sim on Omniverse, which will enable developers to leverage virtual 3D building blocks that simulate complex warehouse environments. The developer will then train and validate a virtual version of the AMR to navigate that environment.

The opportunity for AMRs is substantial across multiple industries, including warehousing, logistics, manufacturing, healthcare, retail, and hospitality. Nvidia cited an ABI Research forecast that the market for AMRs will grow from under $8 billion in 2021 to more than $46 billion by 2030.
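To put that forecast in annual terms, the implied compound growth rate can be worked out as follows. This is a back-of-the-envelope sketch that treats the two dollar figures as exact endpoints. Since the article gives them as “under” and “more than,” the result is best read as a floor, which comes out a bit over 21 percent a year.

```python
# Endpoints quoted in the article, in billions of US dollars.
START_YEAR, START_USD_BN = 2021, 8.0   # "under $8 billion"
END_YEAR, END_USD_BN = 2030, 46.0      # "more than $46 billion"

years = END_YEAR - START_YEAR
growth_multiple = END_USD_BN / START_USD_BN

# Compound annual growth rate: the constant yearly rate that turns the
# starting value into the ending value over the given number of years.
cagr = growth_multiple ** (1 / years) - 1

print(f"{growth_multiple:.2f}x over {years} years, roughly {cagr:.0%} per year")
```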

“The old method of designing the AMR compute and sensor stack from the ground up is too costly in time and effort,” said Nvidia Senior Product Marketing Manager Gerard Andrews in an Nvidia blog post today. “Tapping into an existing platform allows manufacturers to focus on building the right software stack for the right robot application.”

Related Items:

Models Trained to Keep the Trains Running

Nvidia’s Enterprise AI Software Now GA

Nvidia Inference Engine Keeps BERT Latency Within a Millisecond
