AWS Announces Support for PyTorch with Amazon Elastic Inference

AWS has announced that Amazon Elastic Inference now supports PyTorch models. PyTorch, which AWS describes as a “popular deep learning framework that uses dynamic computational graphs,” is free, open-source software developed largely by Facebook’s AI Research lab (FAIR) that lets developers build deep learning models in Python. With the announcement, PyTorch models can use Elastic Inference on Amazon SageMaker and Amazon EC2. PyTorch is the third major deep learning framework supported by Amazon Elastic Inference, following TensorFlow and Apache MXNet.

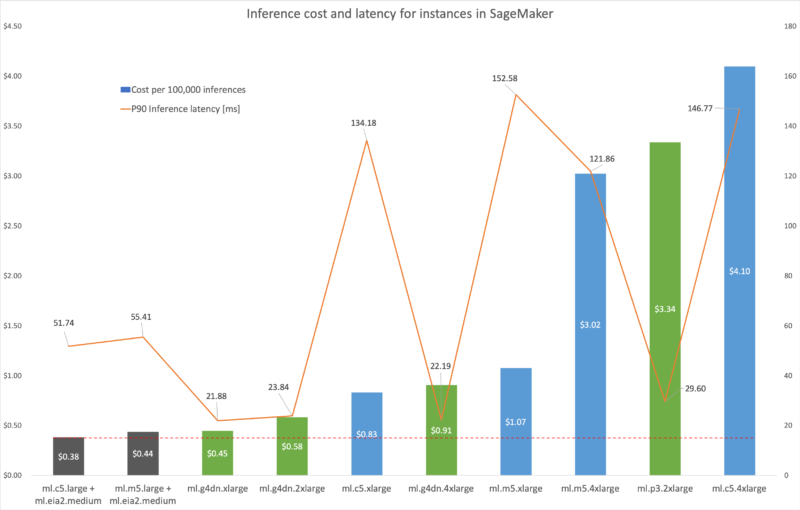

Inference, the process of making predictions with a trained model, is compute-intensive, accounting for up to 90% of PyTorch models’ total compute costs, according to AWS. Instance selection is therefore important for optimization, because different models need different amounts of GPU, CPU, and memory. “Optimizing for one of these resources on a standalone GPU instance usually leads to under-utilization of other resources,” wrote David Fan (a software engineer with AWS AI) and Srinivas Hanabe (a principal product manager with AWS AI for Elastic Inference) in the AWS announcement blog. “Therefore, you might pay for unused resources.”

The duo argue that Amazon Elastic Inference solves this problem for PyTorch by allowing users to select the most appropriate CPU instance in AWS and separately select the appropriate amount of GPU-based inference acceleration.

In order to use PyTorch with Elastic Inference, developers must convert their models to TorchScript. “PyTorch’s use of dynamic computational graphs greatly simplifies the model development process,” Fan and Hanabe wrote. “However, this paradigm presents unique challenges for production model deployment. In a production context, it is beneficial to have a static graph representation of the model.”
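As a rough illustration of what that conversion involves, the sketch below uses only stock PyTorch calls (`torch.jit.trace`, `torch.jit.script`, `torch.jit.save`, `torch.jit.load`). The `TinyClassifier` model and its input shape are hypothetical stand-ins, and nothing here is specific to Elastic Inference; the AWS post covers the service-specific steps.

```python
import io

import torch
import torch.nn as nn


class TinyClassifier(nn.Module):
    """Hypothetical stand-in for a trained model (not from the AWS post)."""

    def __init__(self):
        super().__init__()
        self.fc = nn.Linear(4, 2)

    def forward(self, x):
        return torch.relu(self.fc(x))


model = TinyClassifier().eval()
example_input = torch.rand(1, 4)

# Tracing runs the model once on an example input and records the
# operations it executes as a static graph.
traced = torch.jit.trace(model, example_input)

# Scripting compiles the module's Python source instead, which preserves
# data-dependent control flow that tracing would not capture.
scripted = torch.jit.script(model)

# Serialize the static graph and load it back. An in-memory buffer is used
# here; a file path such as "model.pt" works the same way.
buffer = io.BytesIO()
torch.jit.save(traced, buffer)
buffer.seek(0)
reloaded = torch.jit.load(buffer)

# The reloaded TorchScript module should match the original model's output.
with torch.no_grad():
    same = torch.allclose(model(example_input), reloaded(example_input))
```

Tracing is usually the simpler option for models without input-dependent branching, while scripting is the safer choice when the forward pass contains loops or conditionals.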

TorchScript, they said, bridges the gap by allowing users to compile and export their models into a graph-based form. In the blog, the authors provide step-by-step guides for using PyTorch with Amazon Elastic Inference, including conversion to TorchScript, instance selection, and more. They also discuss cost and latency among cloud deep learning platforms, highlighting how Elastic Inference’s hybrid approach offers “the best of both worlds” by combining the advantages of CPUs and GPUs without the drawbacks of standalone instances. To illustrate, they presented a bar chart comparing cost-per-inference and latency across models run with Elastic Inference (gray), models run on standalone GPU instances (green), and models run on standalone CPU instances (blue).
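To see how cost-per-inference relates to latency, here is a back-of-the-envelope sketch. It assumes requests are served one at a time on a fully utilized endpoint billed by the hour. That simplification is ours and not necessarily the method behind the AWS chart. The function name and every number below are hypothetical placeholders, not AWS prices or measured latencies.

```python
def cost_per_inferences(hourly_price_usd, latency_ms, num_inferences=100_000):
    """Estimate the cost of serving num_inferences sequential requests.

    Assumes one request at a time and an endpoint that is busy for
    exactly latency_ms per request (a simplifying assumption).
    """
    price_per_second = hourly_price_usd / 3600.0
    seconds_per_inference = latency_ms / 1000.0
    return price_per_second * seconds_per_inference * num_inferences


# Hypothetical placeholder figures: (hourly price in USD, latency in ms).
options = {
    "option_a": (0.25, 90.0),
    "option_b": (1.00, 20.0),
}

for name, (price, latency) in options.items():
    cost = cost_per_inferences(price, latency)
    print(f"{name}: ${cost:.4f} per 100,000 inferences")
```

Under this model a cheaper instance can still cost more per inference if it is proportionally slower, which is why the comparison weighs price and latency together.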

“Amazon Elastic Inference is a low-cost and flexible solution for PyTorch inference workloads on Amazon SageMaker,” they concluded. “You can get GPU-like inference acceleration and remain more cost-effective than both standalone Amazon SageMaker GPU and CPU instances, by attaching Elastic Inference accelerators to an Amazon SageMaker instance.”
