Hate Hadoop? Then You’re Doing It Wrong

(mw2st/Shutterstock)

There’s been a lot of Monday morning quarterbacking claiming that Hadoop never made sense in the first place and that it has met its demise. Comments ranging from “Hadoop is slow for small, ad hoc jobs” to “Hadoop is dead and Spark is the victor” are now commonplace.

The frenzy around Hadoop’s deficiencies is almost as fierce as the initial hype around how powerful and disruptive it was. However, while it’s understandable that people have come up against difficulties deploying Hadoop, that doesn’t mean the negative chatter is true.

Out of Sight

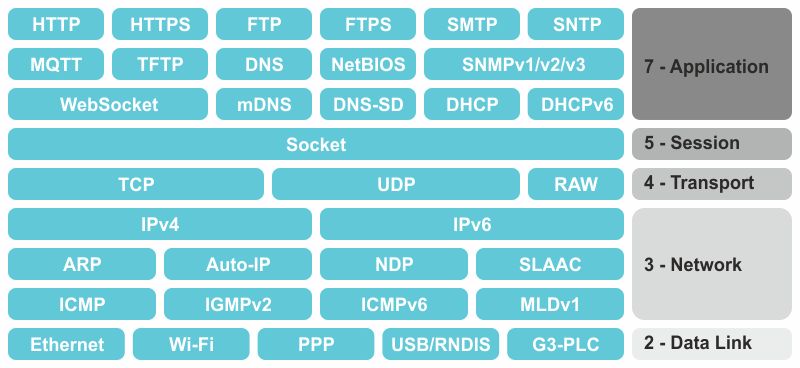

TCP/IP powers the Internet, your email, your apps and more. But chances are you rarely hear about it. When you request a ride-sharing service, stream media or surf the Internet, you’re benefitting from its power.

For the most part, we all rely on TCP/IP daily, yet have no interest in or need to configure it. We don’t spend time on our Macs typing commands like ifconfig to see how our WiFi adapters are configured to get online.

The complexity of the TCP/IP stack is mostly invisible to us now, as Hadoop’s complexity will eventually be.

In the 1990s, TCP/IP was sold as a separate product, and adoption was somewhat tepid. Eventually, TCP/IP got built into operating systems and, perhaps paradoxically, that’s when it conquered all. It became a universal standard and, at the same time, disappeared from plain sight.

Hadoop Is Infrastructure

Similarly, Hadoop is the TCP/IP of the Big Data world. It’s infrastructure that delivers huge benefits, but those benefits are greatly diluted when the infrastructure is exposed. Hadoop has been marketed like a Web browser, but it’s much more like TCP/IP.

If you’re working with Hadoop directly, you’re doing it wrong. If you’re typing “Hadoop” and a bunch of parameters at the command line, you’ve got it all backwards. Do you want to configure and run everything yourself, or do you just want to work with your data and let analytics software handle Hadoop on the back end?

Most people would choose the latter, but the Big Data industry has often steered customers toward the former. It did so with Hadoop, and it’s doing the same with Spark and numerous machine-learning tools now.

It’s a case of the technologist tail wagging the business user dog, and that never ends well.

Dev Tools Aren’t Biz Tools

It’s not that the industry has been totally oblivious to this problem. Some vendors have tried to up their tooling game and smooth out Hadoop’s rough edges. Open source projects with names like Hue, Jupyter, Zeppelin and Ambari have cropped up, aiming to get Hadoop practitioners off the command line.

Getting Hadoop users off the command line doesn’t necessarily mean they’re more productive.

But therein lies the problem. We need tools for business users, not Hadoop practitioners. Hue is great for running and tracking Hadoop execution jobs or for writing queries in SQL or other languages. Jupyter and Zeppelin are great for writing and running code against Spark, in data science-friendly languages like R and Python, and even rendering the data visualizations that code produces.

The problem is these tools don’t get rid of command line tasks; they just make people more efficient at doing them. Getting people physically off the command line may be helpful, but having them do the same stuff, even if it’s easier, doesn’t really change the equation.

There’s a balancing act here. To do Big Data analytics right, you shouldn’t have to use the engine (Hadoop, in this case) directly, but you still want its full power. To make that happen, you need an analytics tool that tames the technology without dismissing it or shooing it away.

Find that middle ground and you’ll be on the right track.

The Path Forward for Hadoop

Hadoop isn’t dead, nor is it the problem. Hadoop is an extremely powerful, critical technology. But it’s also infrastructure. It never should have been the poster child for Big Data. Hadoop (and Spark, for that matter) is technology that should be embedded in other technologies and products. That way, those products can leverage its power without exposing its complexity.

Hadoop is no more dead than TCP/IP is. The problem is how people have used it, not what it does. If you want to do big data analytics right, exploit its power behind the scenes, where Hadoop belongs. If you do it that way, Hadoop will be resurrected, not by magic, but by common sense.

But you probably won’t even notice. And if that’s the case, then you’re doing it right.

About the author: Andrew Brust is Senior Director, Market Strategy and Intelligence at Datameer, liaising between the Marketing, Product and Product Management teams and the big data analytics community. He also writes a blog for ZDNet called “Big on Data” (zdnet.com/blog/big-data); is an advisor to NYTECH, the New York Technology Council; serves as Microsoft Regional Director and MVP; and writes the Redmond Review column for VisualStudioMagazine.com.

