Happy Birthday, Hadoop: Celebrating 10 Years of Improbable Growth

It’s hard to believe, but the first Hadoop cluster went into production at Yahoo 10 years ago today. What began as an experiment in distributed computing for an Internet search engine has turned into a global phenomenon and a focal point for a big data ecosystem driving billions in spending. Here are some thoughts on the big yellow elephant’s milestone from the people involved in Hadoop’s early days.

Hadoop’s story started before January 2006, of course. In the early 2000s, Doug Cutting, who created the Apache Lucene search engine, was working with Mike Cafarella to build a more scalable Web crawler called Nutch. The pair drew inspiration from the Google File System white paper, using it as the model for the Nutch Distributed File System they built in 2004. A year later, they built a MapReduce framework to sit atop it.

Doug Cutting named Hadoop after his son’s yellow toy elephant

The software was promising, says Cutting, who is now the chief architect at Cloudera, but they needed some outside support. “I was worried that, if the two of us working on it then, Mike Cafarella & I, didn’t get substantial help, then the entire effort might fizzle and be forgotten,” Cutting tells Datanami via email. “We found help in Yahoo, who I started working for in early 2006. Yahoo dedicated a large team to Hadoop and, after a year or so of investment, we at last had a system that was broadly usable.”

On January 28, 2006, the first Nutch (as it was then known) cluster went live at Yahoo. Sean Suchter ran the Web search engineering team at Yahoo and was the first alpha user for the technology that would become Hadoop. Suchter, who is the founder and CEO of Hadoop performance management tool provider Pepperdata, remembers those early days.

“That inaugural year in 2006, the Hadoop cluster started life with a mere 10 nodes–and we were thrilled if Hadoop ran continuously for a full day,” Suchter tells Datanami. “My team had huge volumes of Web crawls and click data to crunch and we threw it all at Hadoop, looking to leverage that data to improve our paid search results, which accounted for half of Yahoo’s revenue at the time.”

Sean Suchter was an alpha tester for Hadoop at Yahoo

“One day,” Suchter continues, “something unexpected happened: Without any warning, the entire organic search functionality of Yahoo’s website suddenly went offline. We literally ran down two flights of stairs to the 6th floor of Yahoo’s Mission College campus, where the Hadoop team sat, barged into their offices, and frantically asked them to stop whatever it was they were doing. We didn’t know what had happened; all we knew was they had to turn Hadoop off NOW.”

The post-mortem would look familiar to many Hadoop operators today, Suchter says: a Yahoo engineer had decided to run a seemingly innocuous job on the cluster, but it inadvertently sucked up all the available network bandwidth, crippling Yahoo’s organic search.

“For a period of almost 20 minutes we were only delivering paid search results, which was great for revenue but bad for customer experience,” Suchter says. “After that there was a very long meeting of about 40 people to discuss exactly what had occurred and how to make sure it never happened again.”

Raymie Stata was on the Nutch board before Yahoo adopted Hadoop

Things improved from there, but it still took a lot of hard work by Yahoo engineers. “I remember in those years we just constantly wanted to grow the number of nodes,” says Raymie Stata, who was vice president and chief architect of search and advertising at Yahoo and sat on the Nutch board. “We were all a little bit surprised how, even when you added 15 or 25 percent more nodes–let alone doubled the size of the cluster–unsurprising things would break and you’d have to get in and fix it.”

But Yahoo persevered, and the Hadoop cluster eventually reached 1,000 nodes, which Stata says was a significant milestone that helped justify investing in a new and untested architecture. “Getting people to move from their existing homegrown well-loved, mature software to something that at the time was new, unproven, in many ways less mature, was a big organizational challenge,” says Stata, who is CEO and founder of Altiscale, a Hadoop as a service provider. “There were a bunch of people…who said ‘Let’s take a risk here.’ In retrospect, we’re all glad we did. It worked out great.”

The initial success of Hadoop helped Yahoo competitively, says Eric Baldeschwieler, who was Yahoo’s vice president for Hadoop engineering. “We were in head-to-head competition with Microsoft and Google who had built proprietary versions of systems similar to Hadoop, and we saw very little advantage in building a third proprietary system because it would be hard to get any notice for doing that,” Baldeschwieler tells Datanami.

Hadoop exceeded Eric Baldeschwieler’s wildest expectations

“Given that we were in a war for talent, not just for systems engineers but even more so for scientists who wanted to work on big data problems in search and advertising, we wanted to do something that would get their attention,” says Baldeschwieler, who would become the founding CEO of Hortonworks. “So the theory was that if we did this in open source, that would have a lot more impact than writing another paper [and] people could train on it in school and they would come out not only ready to work for us because they were familiar with our tool but also because they were familiar with the fact that Yahoo was a company that worked on big data.”

That’s not to say Hadoop, which would become a top-level project at the Apache Software Foundation, didn’t have its doubters within Yahoo. “My first thought was, who writes system software in Java?!” says Arun Murthy, who was an architect in Yahoo’s Hadoop Map-Reduce team. Murthy eventually conceded that Hadoop’s open source approach was better than a competing proprietary approach written in C–even if Hadoop was written in Java.

“…Yahoo decided to abandon the proprietary project because everyone knew the open source community would outpace that project,” says Murthy, who would go on to be a co-founder and architect at Hortonworks. “Hadoop needed a lot of work back in those early days. When we started on Hadoop at Yahoo, it worked on barely two to three machines. We invested a lot in improving Hadoop, so much that at one point we had 100-plus people working on it.”

Arun Murthy couldn’t believe Hadoop was written in Java

Eventually, the other Web giants in Silicon Valley saw what Yahoo was doing, and they wanted a part of it, too. “A huge investment was made over an extended period of time in making the Hadoop implementation of MapReduce extremely scalable,” Altiscale’s Stata says. “The Hadoop implementation [of MapReduce] made it a framework that was impossible to resist. eBay picked it up, then Facebook picked it up, then Twitter picked it up. They all picked it up because it had that horizontal scalability.”

Ashish Thusoo was at Facebook when Cutting, Suchter, Stata, and others put Hadoop into production at Yahoo. “Needless to say, those early days were very different from where Hadoop is today,” says Thusoo, who would go on to co-found Hadoop as a service firm Qubole. “The whole team of committers could fit in a conference room. At the time, Hadoop was still only used by search teams.”

But Thusoo saw the potential in Hadoop as a foundation for data warehousing and a replacement for the expensive options that vendors were promoting at the time. “We were very intrigued by it,” he says. “Also, the architecture behind it was very simple and a lot of us had read or heard from some colleagues about how that architecture had been very successful at Google. When we started comparing the scalability and the open source benefits with commercial software for data processing available at that time, we were convinced of its potential in disrupting the data industry.”

![Ashish Thusoo](https://www.bigdatawire.com/wp-content/uploads/2015/08/ashishthusoo1.jpg)

At Facebook, Ashish Thusoo created a SQL interface for Hadoop called Hive

Hadoop’s star was beginning to shine by 2007, when the first official Hadoop meetup took place at a bar in the Bay Area. Ted Dunning, who would later become the chief applications architect at MapR Technologies, recalls he volunteered to buy the beer.

“We had 35 people, Doug, myself, and other folks from Sun and Oracle. It was a pretty good group,” Dunning tells Datanami. “Even though Ellen [Friedman], my co-author and wife, and I were walking around with pitchers of beer trying to pour more beer into people’s glasses and handing out appetizers, they would forget to drink and eat, they were so into the topic. That was one of the earliest indicators to me that it was going to be hot.”

Nevertheless, nobody thought that Hadoop would take off like it did, including Cutting. “I thought that if we could get Hadoop to be relatively stable & reliable then engineers at Web companies and researchers at universities would use it, that it would be a successful open source project,” Cutting says. “What I didn’t predict was that mainstream enterprises would be willing to adopt open-source software. The folks who founded Cloudera saw that possibility and began the steps to make Hadoop palatable to enterprises. I joined them a year later as I began to see the magnitude of this possibility.”

Ted Dunning was expecting to spend way more than $140 on beer and snacks at the first Hadoop meetup

Stata echoes that sentiment. “It far surpassed our expectations in terms of its impact and the level of investment, frankly, that it’s received,” he says. “We were obviously optimistic that we would have a successful open source project on our hands and that it would become reasonably widely used in the Internet community. We were expecting and hoping that the Internet companies would pick it up. But I think we never anticipated that it would become as big as it’s become and of interest to such a broad community.”

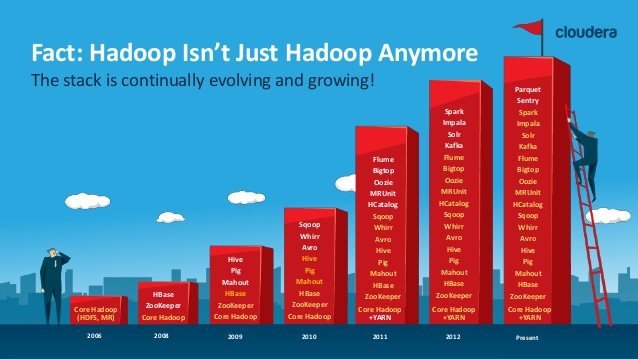

Eventually, it became evident that Hadoop’s impact would be felt far beyond having just a more scalable Internet crawler. Apache Hadoop started with just two components, HDFS and MapReduce, but it grew quickly. Today several dozen Apache projects are directly linked to Hadoop and included in most Hadoop distributions, among them Pig, Oozie, ZooKeeper, Hive, HBase, and Spark. And outside this core, the ecosystem of applications is even bigger and broader.

“Hadoop 10 years ago was ground zero,” says Jack Norris, an IT industry veteran who is now chief marketing officer at MapR. “This is unique in that it’s still early days of this technology. There’s no 30-year history that we’re looking back on as we develop these open source capabilities. That’s why this…is such a robust and dynamic ecosystem.”

“Early on we thought it could be similar to the Apache HTTP Server Project in some ways,” says Hortonworks’ Murthy, “but Hadoop has become much larger. Many enterprises–financial institutions, retailers, insurers, etc.–are basing their data strategy on Hadoop. The pace of innovation in the community has been mind-blowing. Projects like Spark are gaining so much momentum, and the sheer number of projects has been fun to watch.”

The Hadoop ecosystem has grown quickly

Two factors gave Hadoop a big tailwind that would propel it into IT stardom: an open source license, and the building tidal wave of big data. “Open source software has a huge advantage,” Cutting says. “Users will much more readily try software that’s unencumbered by commercial licenses. When they find it nearly sufficient, some get involved in its development, improving it and increasing its rate of progress.”

“Second,” Cutting continues, “our timing was also near perfect. As digital devices have become more widespread, from phones and desktop computers to cash registers and bar code scanners, most businesses have begun a digital transformation, with digital systems involved in almost every aspect of their operation. Hadoop lets such firms capture and benefit from much more of this data than before. Hadoop arrived right when businesses were ready to take advantage of it.”

It was fortuitous that Hadoop landed on the scene when it did, but Hadoop’s presence also helped accelerate data generation, says Stata. “Hadoop enabled a rapid development of a big data industry, but also benefited from these data generation trends, which in turn necessitated a big data industry,” he says. “It’s impossible at this point to separate Hadoop from the big data explosion. It was significant because it allowed that to happen.”

A simple and elegant design gave the pachyderm one more ace up its open source sleeve: commodity pricing. MapR’s Dunning recalls challenging two developers to build a recommendation engine. One was an Oracle pro with a quarter-million-dollar machine at his disposal, while the other had some scripting skills and a small Hadoop cluster.

“It took [the Oracle guy] several weeks to get something running efficiently enough, whereas the other guy, with a little scripting background, took about a week to get it running on Hadoop on less than $10,000 of hardware,” Dunning says. “It ran just as fast as the thing that took six times longer to develop on Oracle. So it was very clear there were at least niche applications where we could do amazing things.”

It’s tough to forecast where Hadoop will be 10 years from now. Will big data and distributed computing still be at the cutting edge, or become just more tools in the chest? How will we develop software? Will we still be calling it Hadoop?

Cutting is bullish on the architecture he named after his son’s stuffed toy elephant. “Hadoop & its ecosystem components will continue to evolve and improve,” he says. “In recent years the ecosystem has added new execution engines like Spark and new storage systems like Kudu. That such fundamental advances in functionality are possible is an advantage of this ecosystem’s architecture: a loose confederation of open source projects. I can’t predict what the next new component will be, but there will definitely be more great stuff.”

Murthy says Hadoop has redefined what’s possible with data. “As you look ahead it’s getting cheaper and cheaper to store and process data,” he says. “This combination is leading to a lot of new business use cases for the enterprise, and Hadoop is part of it.”

Stata predicts the name Hadoop will continue to be used. “How the Hadoop project specifically matures over the [next] 10 years–what happens to HDFS or YARN–that’s harder to predict,” he says. “But I think the Hadoop ecosystem will be alive and well, and those of us who worked on it 10 years ago will probably look at this and say, ‘I know why that’s the way it is. It might seem broken. But 20 years ago, here’s why we did it.’ That influence will still be there.”