Yahoo is in the process of implementing a big data tool called Druid to power high-speed real-time queries against its massive Hadoop-based data lake. Engineers at the Web giant say the open source database’s combination of speed and usability on fast-moving data makes it ideal for the job.

Druid is a column-oriented in-memory OLAP data store that was originally developed more than four years ago by the folks at Metamarkets, a developer of programmatic advertising solutions. The company was struggling to keep the Web-based analytic consoles it provides customers fed with the latest clickstream data using relational tools like Greenplum and NoSQL databases like HBase, so it developed its own distributed database instead.

The core design parameter for Druid was the ability to compute drill-downs and roll-ups over a large set of “high dimensional” data comprising billions of events, and to do so in real time, Druid creator Eric Tschetter wrote in a 2011 blog post introducing Druid. To accomplish this, Tschetter decided that Druid would feature a parallelized, in-memory architecture that scaled out, enabling users to easily add more memory as needed.
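To make "roll-up" and "drill-down" concrete, here is a minimal sketch in plain Python, assuming hypothetical clickstream events with a timestamp, two dimensions (`country`, `device`), and one additive metric (`clicks`). It is not Druid's code: it only shows that grouping by fewer dimensions rolls the data up, while grouping by more dimensions drills down into the same totals.

```python
from collections import defaultdict
from datetime import datetime


def truncate_to_hour(ts: datetime) -> datetime:
    """Bucket a timestamp into its hour (the assumed query granularity)."""
    return ts.replace(minute=0, second=0, microsecond=0)


def roll_up(events, dims):
    """Sum the 'clicks' metric per (hour, *dims) group.

    Passing fewer dimension names rolls the data up; passing more drills down.
    """
    totals = defaultdict(int)
    for event in events:
        key = (truncate_to_hour(event["ts"]),) + tuple(event[d] for d in dims)
        totals[key] += event["clicks"]
    return dict(totals)


# Hypothetical clickstream events; field names are illustrative only.
events = [
    {"ts": datetime(2015, 1, 1, 10, 5), "country": "US", "device": "mobile", "clicks": 3},
    {"ts": datetime(2015, 1, 1, 10, 40), "country": "US", "device": "desktop", "clicks": 2},
    {"ts": datetime(2015, 1, 1, 11, 2), "country": "CA", "device": "mobile", "clicks": 1},
]

by_country = roll_up(events, ["country"])                     # rolled up
by_country_device = roll_up(events, ["country", "device"])    # drilled down
```

Because `clicks` is additive, the drilled-down groups always sum to the rolled-up totals; the hard part at Druid's scale is doing this over billions of events interactively.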

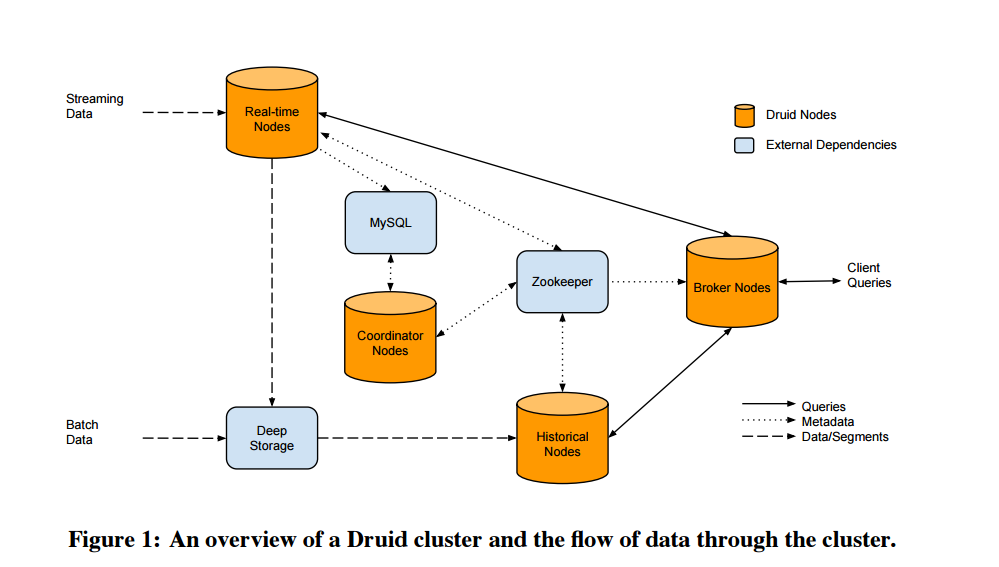

Druid essentially maps the data to memory as it arrives, compresses it into columns, and then builds an index for each column. It also maintains two separate subsystems: a read-optimized subsystem in the historical nodes and a write-optimized subsystem in the real-time nodes (hence the name “Druid,” after the shape-shifting character common in role-playing games). This approach lets the database query very large amounts of historical and real-time data, says Tschetter, who left Metamarkets to join Yahoo in late 2014.
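As a rough illustration of why per-column indexes help, the sketch below stores each string column dictionary-encoded and keeps one bitmap per distinct value, so a filter becomes a bitwise AND rather than a row-by-row scan. The class and function names (`IndexedColumn`, `bitmap_to_rows`, `build_column`) and the data are hypothetical, and Python integers stand in for the compressed bitmaps a real column store would use; treat this as a simplified sketch of the general technique, not Druid's segment format.

```python
from dataclasses import dataclass, field


@dataclass
class IndexedColumn:
    """Toy string column: dictionary-encoded values plus one bitmap per distinct value."""
    dictionary: dict = field(default_factory=dict)  # value -> small integer id
    encoded: list = field(default_factory=list)     # row number -> integer id
    bitmaps: dict = field(default_factory=dict)     # value -> int used as a bitset of row numbers

    def append(self, value: str) -> None:
        row = len(self.encoded)
        value_id = self.dictionary.setdefault(value, len(self.dictionary))
        self.encoded.append(value_id)
        # Set bit `row` in this value's bitmap.
        self.bitmaps[value] = self.bitmaps.get(value, 0) | (1 << row)

    def rows_matching(self, value: str) -> int:
        return self.bitmaps.get(value, 0)


def bitmap_to_rows(bitmap: int) -> list:
    """Expand a bitset into the list of row numbers whose bits are set."""
    rows, row = [], 0
    while bitmap:
        if bitmap & 1:
            rows.append(row)
        bitmap >>= 1
        row += 1
    return rows


def build_column(values) -> IndexedColumn:
    column = IndexedColumn()
    for v in values:
        column.append(v)
    return column


# Hypothetical data: two dimension columns and one metric column.
country = build_column(["US", "CA", "US", "US"])
device = build_column(["mobile", "mobile", "desktop", "mobile"])
clicks = [3, 1, 2, 4]

# country = 'US' AND device = 'mobile': intersect bitmaps instead of scanning rows.
match = country.rows_matching("US") & device.rows_matching("mobile")
matching_rows = bitmap_to_rows(match)
total_clicks = sum(clicks[r] for r in matching_rows)
```

Only rows 0 and 3 survive the intersection, so only those entries of the `clicks` column are read to produce the total of 7.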


“Druid’s power resides in providing users fast, arbitrarily deep exploration of large-scale transaction data,” Tschetter writes. “Queries over billions of rows, that previously took minutes or hours to run, can now be investigated directly with sub-second response times.”

Metamarkets released Druid as an open source project on GitHub in October 2012. Since then, the software has been used by a number of companies for various purposes, including as a platform for video network monitoring, operations monitoring, and online advertising analytics, according to a 2014 white paper.

Netflix was one of the early companies to test Druid, but it’s unclear whether it put the software into production. One company that has adopted Druid is Yahoo, the ancestral home of Hadoop. Yahoo is now using Druid to power a variety of real-time analytic interfaces, including executive-level dashboards and customer-facing analytics, according to a post last week on the Yahoo Engineering blog.

Yahoo engineers explain Druid in this manner:

“The architecture blends traditional search infrastructure with database technologies and has parallels to other closed-source systems like Google’s Dremel, Powerdrill and Mesa. Druid excels at finding exactly what it needs to scan for a query, and was built for fast aggregations over arbitrary slice-and-diced data. Combined with its high availability characteristics, and support for multi-tenant query workloads, Druid is ideal for powering interactive, user-facing, analytic applications.”
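One way a store can "find exactly what it needs to scan" is to partition data by time and skip every partition whose interval cannot overlap the query. The sketch below assumes hypothetical daily segments described by a `Segment` record with half-open `[start, end)` intervals; the names and layout are illustrative, and Druid's real segment metadata and query planning involve considerably more than this overlap test.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Segment:
    """Hypothetical descriptor for a chunk of data covering the half-open interval [start, end)."""
    name: str
    start: datetime
    end: datetime


def segments_to_scan(segments, query_start: datetime, query_end: datetime):
    """Keep only segments whose interval overlaps the query interval [query_start, query_end)."""
    return [s for s in segments if s.start < query_end and query_start < s.end]


# Hypothetical layout: one segment per day, starting 2015-01-01.
day0 = datetime(2015, 1, 1)
daily = [
    Segment(f"day-{i}", day0 + timedelta(days=i), day0 + timedelta(days=i + 1))
    for i in range(4)
]

# A query from Jan 2, 12:00 to Jan 3, 06:00 touches only two of the four segments.
picked = segments_to_scan(daily, datetime(2015, 1, 2, 12), datetime(2015, 1, 3, 6))
```

Pruning by time happens before any column is read, and the per-column indexes then narrow the rows inside each surviving segment.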

Yahoo landed on Druid after attempting to build its data applications using various infrastructure pieces, including Hadoop and Hive, relational databases, key/value stores, Spark and Shark, Impala, and many others. “The solutions each have their strengths,” Yahoo wrote, “but none of them seemed to support the full set of requirements that we had,” which included ad hoc slicing and dicing, scaling to tens of billions of events a day, and real-time data ingestion.


Another property of Druid that caught Yahoo’s eye was its “lock-free, streaming ingestion capabilities.” Because Druid works with open source big data message buses like Kafka as well as with proprietary systems, it fits nicely into Yahoo’s stack, the company said. “Events can be explored milliseconds after they occur while providing a single consolidated view of both real-time events and historical events that occurred years in the past,” the company writes.
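The "single consolidated view" can be pictured as a merge of partial results: a write-optimized buffer aggregates events the moment they arrive, a read-optimized store holds pre-aggregated history, and a query combines both. In the sketch below, `RealtimeBuffer`, `merge_partial_results`, and all data are hypothetical stand-ins; in a real Druid cluster a broker fans the query out to historical and real-time nodes and merges what they return, which this sketch reduces to a single function call.

```python
from collections import Counter


class RealtimeBuffer:
    """Hypothetical write-optimized, in-memory store for freshly ingested events."""

    def __init__(self) -> None:
        self.totals = Counter()

    def ingest(self, event: dict) -> None:
        # Aggregate on arrival so the event is queryable immediately.
        self.totals[event["country"]] += event["clicks"]

    def query(self) -> dict:
        return dict(self.totals)


def merge_partial_results(*partials: dict) -> dict:
    """Sum per-key partial aggregates returned by different nodes."""
    merged = Counter()
    for partial in partials:
        merged.update(partial)  # Counter.update adds counts for each key
    return dict(merged)


# Hypothetical pre-aggregated history, standing in for read-optimized historical nodes.
historical = {"US": 1000, "CA": 200}

realtime = RealtimeBuffer()
for event in [{"country": "US", "clicks": 3}, {"country": "FR", "clicks": 2}]:
    realtime.ingest(event)

consolidated = merge_partial_results(historical, realtime.query())
```

Summing partials like this is only valid for additive metrics such as counts and sums; metrics like distinct counts or medians need mergeable intermediate states instead.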

As it does for all open source products that it finds useful, Yahoo is investing in Druid. For more info, see the Druid website at http://druid.io.
