NumaQ Speeds Analytics with Massive Shared Memory Cluster

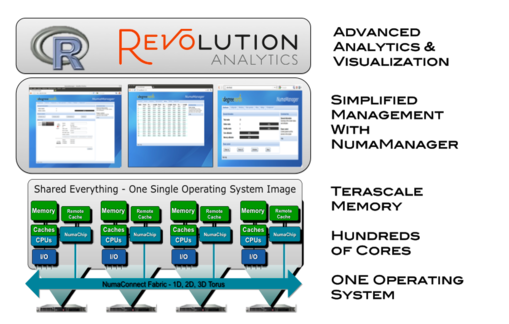

An in-memory big data analytics appliance dubbed NumaQ is said to allow up to 32 terabytes of data to be loaded and analyzed in memory, helping to eliminate a major bottleneck in data analytics performance. NumaQ, which was designed by the Singapore startup 1degreenorth and launched in 2012, uses commodity servers while leveraging a new architecture to overcome memory limits inherent in cluster computing. The company’s design strategy is based on the assumption that scaling requires hardware and that software alone can’t provide the processing power required for big data analytics.

NumaQ is an x86-based shared memory system customized for big data in-memory analytics, the company said. It is based on the latest CPU from Advanced Micro Devices, with servers linked via NumaConnect and running a “tuned” Linux operating system. The appliance is designed to analyze and visualize big data workloads by virtualizing multiple x86-based servers into a single symmetric multiprocessing (SMP) server via Numascale’s hardware. The appliance includes an Nvidia graphics card that allows analysis of large data sets that previously required an HPC cluster. The appliance ships with R-based software from Revolution Analytics. It can also be used with SAS software.

A key bottleneck in traditional architectures is the available memory, which is limited to the memory in individual servers. Regardless of the number of servers in a cluster, the largest chunk of available memory is local. 1degreenorth addressed that bottleneck by combining the servers’ local memory into a large pool of global memory optimized for in-memory computing. The result is a real (rather than virtual) SMP server in which two or more processors are connected to a single shared memory. Processors have full access to I/O devices and are controlled by a single Linux operating system.

The result is an architecture that allows huge volumes of data to be loaded into memory for applications like real-time analytics, data modeling, and high-performance computing. The Singapore company claims the resulting performance is up to 100 times faster than accessing data on hard disks across various cluster nodes.

Among the potential big data users are telecommunications companies seeking to sift through data generated by hundreds of millions of network users. One application would allow telcos to carry out behavioral analyses to respond to subscribers’ usage patterns and preferences. In-memory analytics could generate a report in a day that previously took a week, 1degreenorth claimed.

Other data-intensive applications include life sciences. Research involving sequencing and mapping of the human genome requires analysis of huge volumes of data. In-memory analytics promises to reduce overall research cycles, the company claimed.

Despite the recent trend toward software-defined solutions, the company argues that NumaQ provides a level of scalability not possible with software solutions. The developer has been designing HPC clusters and grids since 1999. It currently consults for government research organizations and multinational corporations in Singapore and the Asia-Pacific region.

The Singapore-based company said NumaQ is available immediately in the following configurations: NumaQ-48 (48 cores, 768 GB RAM), NumaQ-96 (96 cores, 1.5 TB RAM) and NumaQ-192 (192 cores, 3 TB RAM).
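
For readers comparing the three configurations, the memory available per core can be worked out from the listed figures. The short Python sketch below does that arithmetic; it assumes 1 TB means 1024 GB, and the helper name `ram_per_core_gb` is purely illustrative, not something supplied by 1degreenorth.

```python
# Configurations as listed in the article: name -> (cores, RAM in GB).
# Assumption (not stated in the article): 1 TB = 1024 GB.
GB_PER_TB = 1024

configs = {
    "NumaQ-48": (48, 768),
    "NumaQ-96": (96, 1.5 * GB_PER_TB),
    "NumaQ-192": (192, 3 * GB_PER_TB),
}


def ram_per_core_gb(cores, ram_gb):
    """Return the RAM per core in GB (illustrative helper)."""
    return ram_gb / cores


for name, (cores, ram_gb) in configs.items():
    print(f"{name}: {ram_per_core_gb(cores, ram_gb):.0f} GB per core")
```

Under that assumption, each configuration works out to 16 GB of RAM per core, which suggests the three models scale cores and memory in the same proportion.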
