Vendor » NVIDIA

Features

DDN Gooses AI Storage Pipelines with Infinia 2.0

AI’s insatiable demand for data has exposed a growing problem: storage infrastructure isn’t keeping up. From training foundation models to running real-time inference, AI workloads require high-throughput, low-latency…

What Are Reasoning Models and Why You Should Care

The meteoric rise of DeepSeek-R1 has put the spotlight on an emerging type of AI model called a reasoning model. As generative AI applications move beyond conversational interfaces, reasoning models are likely to grow…

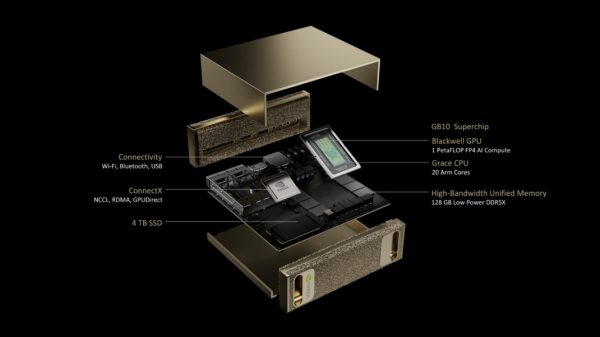

Inside Nvidia’s New Desktop AI Box, ‘Project DIGITS’

At the 2025 CES event, Nvidia announced a new $3,000 desktop computer developed in collaboration with MediaTek. It is powered by a new Grace Blackwell Superchip that pairs a cut-down Arm-based Grace CPU with a Blackwell GPU. The new system is called…

Nvidia Touts Lower ‘Time-to-First-Train’ with DGX Cloud on AWS

Customers have a lot of options when it comes to building their generative AI stacks to train, fine-tune, and run AI models. In some cases, the number of options may be overwhelming. To help simplify the decision-making…

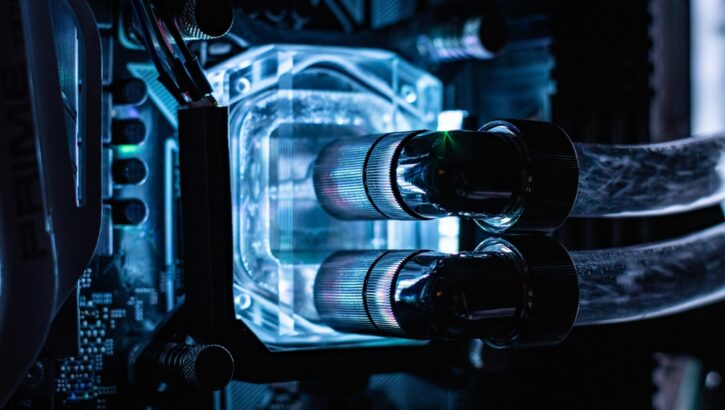

NVIDIA’s Blackwell Showcases the Future of AI Is Water-Cooled – For Now

NVIDIA’s Blackwell processor is a game changer. It is also incredibly dense, and it runs hot. Apparently, this heat doesn’t become a big problem until you have a whopping 72 of the processors in a rack, but if you get…

AWS Goes Big on AI with Project Rainier Super and Nova FMs

At AWS re:Invent 2024 in Las Vegas, Amazon unveiled a series of transformative AI initiatives, including the development of one of the world's largest AI supercomputers in partnership with Anthropic, the introduction of…

Big Data Career Notes for November 2024

It’s that time of month again: time for Big Data Career Notes, a monthly feature where we keep you up-to-date on the latest career developments for individuals in the big data community. Whether it’s a promotion,…

EY Experts Provide Tips for Responsible GenAI Development

Financial services businesses, like many other organizations, are eager to adopt generative AI to cut costs, grow revenues, and increase customer satisfaction. However, the risks associated with GenAI are not trivial, especially…

Nvidia Debuts Enterprise Reference Architectures to Build AI Factories

The advent of generative AI has supersized the appetite for GPUs and other forms of accelerated computing. To help companies scale up their accelerated compute investments in a predictable manner, GPU giant Nvidia and…

NVIDIA Is Increasingly the Secret Sauce in AI Deployments, But You Still Need Experience

I’ve been through a number of briefings from vendors ranging from IBM to HP, and there is one constant: they are all leaning heavily on NVIDIA for their AI services strategy. That may be a best practice, but NVIDIA…

This Just In

SAN JOSE, Calif., March 21, 2025 — NetApp has announced that NVIDIA has validated high-performance NetApp enterprise storage systems with NetApp ONTAP for environments powering AI training and inferencing.

BOSTON, March 20, 2025 — DataRobot has announced the general availability of expanded integrations with NVIDIA AI Enterprise to accelerate production-ready agentic AI applications. Cloud customers can now use DataRobot fully integrated and pre-installed with NVIDIA AI Enterprise, complete with a new gallery of NVIDIA NIM microservices and the NVIDIA NeMo framework, including the new NVIDIA Llama Nemotron Reasoning models, accelerating AI development and delivery.

SAN JOSE, Calif., March 20, 2025 — NetApp has announced it is advancing the state of the art in agentic AI with intelligent data infrastructure that taps the NVIDIA AI Data Platform reference design.

SANTA CLARA, Calif., March 20, 2025 – Quobyte has added support for ARM architecture to its software-defined storage platform. Effective immediately, customers can integrate ARM-based systems—including NVIDIA Grace, Ampere CPUs, and AWS Graviton—into new or existing x86-based Quobyte clusters.

SAN JOSE, Calif., March 20, 2025 — Supermicro, Inc. today announced a new optimized storage server for high-performance software-defined storage workloads. This first-of-its-class storage server from a major Tier 1 provider utilizes Supermicro’s system design expertise to create a high-density storage server for software-defined workloads found in AI and ML training and inferencing, analytics and enterprise storage workloads.

WEST PALM BEACH, Fla., March 20, 2025 — Vultr has announced that it is among the first cloud providers to offer early access to the NVIDIA HGX B200. Vultr Cloud GPU, accelerated by NVIDIA HGX B200, will provide training and inference support for enterprises looking to scale AI-native applications via Vultr’s 32 cloud data center regions worldwide.

March 20, 2025 — NVIDIA this week announced the NVIDIA AI Data Platform, a customizable reference design that leading providers are using to build a new class of AI infrastructure for demanding AI inference workloads…

SAN JOSE, Calif. and CAMPBELL, Calif., March 19, 2025 — WEKA has announced it is integrating with the NVIDIA AI Data Platform reference design and has achieved NVIDIA storage certifications to provide optimized AI infrastructure for the future of agentic AI and reasoning models.

SAN FRANCISCO, March 19, 2025 — Galileo has announced an integration with NVIDIA NeMo, enabling customers to continuously improve their custom generative AI models. Now, customers can evaluate models comprehensively across the development lifecycle, curating feedback into datasets that power additional fine-tuning.

March 19, 2025 — NVIDIA has unveiled NVIDIA DGX personal AI supercomputers powered by the NVIDIA Grace Blackwell platform. DGX Spark (formerly Project DIGITS) and DGX Station, a new high-performance NVIDIA Grace Blackwell desktop supercomputer powered by the NVIDIA Blackwell Ultra platform, enable AI developers, researchers, data scientists and students to prototype, fine-tune and run inference on large models on desktops.