AtScale Likes Its Odds in Race to Build Universal Semantic Layer

(Oselote/Shutterstock)

Semantic layers are suddenly a hot commodity thanks to their ability to make private business data intelligible to AI models. Databricks and Snowflake are both building their own semantic layers, but if broad industry support, universal applicability, and the ability to switch data lakehouse providers are the goal, then AtScale says it’s ahead of the game.

Over the past year, the capability of large language models (LLMs) to generate good-quality SQL code has increased dramatically, which has spurred great interest in using LLMs as de facto data analysts. The big hope is that using an LLM to convert a natural language query into SQL will enable many more people, applications, and AI agents to get access to business data, thereby achieving (finally!) the longstanding goal in the BI community of democratizing access to data.

That’s the grand plan, anyway, but there are a few small details to work out, including the fact that the big LLMs have (hopefully) never seen your private database before and therefore have no idea what the columns, rows, tables, and views actually mean. That’s sort of a problem if accuracy is important to your board of directors.

And that’s where a semantic layer plays an important role, by functioning as a translator, if you will, between the specific way you’ve modeled your data in your database–including the particular measures, dimensions, and metrics that define your individual business–and the generic definitions that SQL query engines and AI models can read and understand.
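To make the translator idea concrete, here is a minimal sketch in Python of a semantic model that maps business-facing names to physical SQL. It is not AtScale's implementation or its modeling language. Every name in it (`Measure`, `Dimension`, `compile_query`, the `fct_orders` table, and its columns) is hypothetical and invented for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Measure:
    name: str        # business-facing name, e.g. "revenue"
    table: str       # physical table that holds the facts
    expression: str  # SQL aggregate that computes the measure


@dataclass(frozen=True)
class Dimension:
    name: str    # business-facing name, e.g. "region"
    column: str  # physical column to group by


# Hypothetical model: tables and columns are assumptions, not a real schema.
MEASURES = {
    "revenue": Measure("revenue", "fct_orders", "SUM(net_amt - refund_amt)"),
}
DIMENSIONS = {
    "region": Dimension("region", "cust_region_cd"),
}


def compile_query(measure_name: str, dimension_name: str) -> str:
    """Translate a business-level request into SQL using the model."""
    m = MEASURES[measure_name]
    d = DIMENSIONS[dimension_name]
    return (
        f"SELECT {d.column} AS {d.name}, {m.expression} AS {m.name} "
        f"FROM {m.table} GROUP BY {d.column}"
    )


sql = compile_query("revenue", "region")
print(sql)
```

In this sketch, the caller (a person, a BI tool, or an LLM) only needs to know the words "revenue" and "region". The cryptic physical names and the business rule for netting out refunds live in one place, the model.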

AtScale Co-founder and CTO David Mariani watched as demand increased for the type of semantic layer that his company builds. Originally developed a dozen years ago to support AtScale’s online analytical processing (OLAP) query engine, the company’s semantic layer itself has become a big sales driver and a focus for the company. That makes the industry activity around semantic layers both good and bad, Mariani says.

“It’s like we were alone in sort of shouting from the mountaintops how important a semantic layer was, and so now the rest of the market agrees, so that’s great. You can’t be a market of one,” Mariani tells BigDATAwire. “So we’re really encouraged that other people are investing in this area. But man, they’ve got a lot of work in front of them. A lot of hard work.”

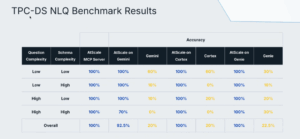

There’s no question that a semantic layer can improve the quality of AI-generated BI queries. AtScale recently conducted a test in which it measured the accuracy of SQL queries generated by Google’s Gemini and Snowflake’s Cortex offerings. The first phase of the test measured their performance on the Transaction Processing Performance Council (TPC) Decision Support (TPC-DS) benchmark running as stand-alone products, and the second phase measured how they performed with the AtScale semantic layer functioning as a translator. Without the semantic layer, Gemini and Cortex query results were in the 0% to 30% accuracy range, depending on schema and question complexity. With AtScale, the scores were 100%.

Why did the scores improve so much? It’s all about understanding how data is stored in the database, which is where the complexity lives. The TPC-DS benchmark simulates a retailer that sells to consumers in three ways: in-store, via the Web, and through a catalog. Sales in each of those channels are booked separately in the database, so to understand what “total sales” means, the person or application generating the SQL query needs to know which specific parts of the database hold the correct numbers to plug into the equation.
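The "total sales" problem can be sketched with a few rows in an in-memory SQLite database. The table names below follow the three TPC-DS sales channels. Treating the `*_ext_sales_price` columns as the definition of "sales" is an assumption made for illustration, as are the sample values. This is not the query AtScale or any LLM produced in the test.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE store_sales   (ss_ext_sales_price REAL);
    CREATE TABLE web_sales     (ws_ext_sales_price REAL);
    CREATE TABLE catalog_sales (cs_ext_sales_price REAL);
    INSERT INTO store_sales   VALUES (100.0), (50.0);
    INSERT INTO web_sales     VALUES (30.0);
    INSERT INTO catalog_sales VALUES (20.0);
    """
)

# Naive guess: treats a single channel as if it were the whole business.
NAIVE_SQL = "SELECT SUM(ss_ext_sales_price) FROM store_sales"

# Assumed semantic definition of "total sales": all three channels combined.
TOTAL_SALES_SQL = """
    SELECT SUM(amount) FROM (
        SELECT ss_ext_sales_price AS amount FROM store_sales
        UNION ALL
        SELECT ws_ext_sales_price FROM web_sales
        UNION ALL
        SELECT cs_ext_sales_price FROM catalog_sales
    )
"""

naive_total = conn.execute(NAIVE_SQL).fetchone()[0]
total_sales = conn.execute(TOTAL_SALES_SQL).fetchone()[0]
print(naive_total, total_sales)
```

With these sample rows, the naive query returns 150.0 while the three-channel definition returns 200.0. Both queries are valid SQL, which is the point: without a shared definition of the metric, a query can run cleanly and still answer the wrong question.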

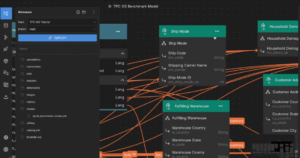

“It’s got to look through dozens of tables–and these are not all just tables, because each of these green boxes are dimensions, which itself have a model behind it,” Mariani says. “So it’s immensely complex. And so to get it right and to get it right consistently without a map–how are you going to get to the destination without a map?”

One solution would be to simply give your proprietary database to the LLM, which may eventually be able to figure it out. But most organizations are hesitant to do that because of security and privacy concerns. The alternative, of course, is to place a semantic layer between the LLM and your database to function as the map or the translator.

The question, then, becomes which semantic layer to use. Many BI tools, like Looker, Tableau, and Power BI, come with their own semantic layers, and data platform providers, like Snowflake and Databricks, are also building semantic layers that understand data stored on their platforms. Alternatively, customers can choose to buy an independent semantic layer that works with multiple front-end BI tools and back-end databases. This is what Mariani and AtScale are building: a universal semantic layer that works with everything.

“It’s like a Rosetta Stone that allows you to plug different things into it, but it still lives within your firewall,” Mariani says. “The semantic layer is that firewall, that abstraction layer which allows them to have the independence to switch out the back end or switch out the front end. Because ultimately your business logic is the same and your presentation is the same regardless of what it’s talking to.”

AtScale isn’t the only vendor building a universal semantic layer. Last week we covered the work that its competitor, Cube, is doing. And dbt Labs is seeking to expand from its dominant role in data transformation into semantic layers.

Mariani respects the work that these vendors are doing, but he also insists that AtScale’s semantic layer is more mature and is better situated to become the standard for this space, if one emerges (which is no guarantee).

LLMs struggle to make sense of complex data modeling schemes on private data (Image source: AtScale)

In 2024, the company took a step toward becoming the industry standard by open sourcing the language it uses to define metrics. Dubbed Semantic Modeling Language (SML), the language is now in the open domain. In addition to defining metrics, SML can be used to translate between other semantic layers, including support for Snowflake, dbt, Power BI, and Looker. Mariani says it’s being donated to the Apache Software Foundation.

Would AtScale take the next step and open source its semantic engine, as Cube has done? That’s not in the cards at the moment, Mariani says.

“For now, no, but we’re definitely interested in establishing a common open source semantic modeling language because we’re seeing there’s now a lot of competing languages,” he says. “We’re not the only game in town. Everybody’s gotten into it and they’re all creating their own languages. And that’s really kind of bad for the industry, I think.”

There’s one more capability in AtScale’s semantic layer that could be an ace up its sleeve: deep technical support for Microsoft’s data and analytics stack.

“The challenge to a universal semantic layer is that you have to connect to everything, and that’s where we have an advantage. Because we’re multi-dimensional, we can support the Microsoft stack through and through,” he says. “That means Excel and Power BI work natively with AtScale, just like they would work with Microsoft Analytics stack. That’s unique to us. And that’s really, really, really hard because those multidimensional languages are not meant to be translated into a tabular SQL language. And we’ve been working on that for literally 12 years. Other vendors are going to have a hard time supporting those interfaces.”

As demand for universal semantic layers picks up, vendors like AtScale will be right in the thick of it. The market hasn’t given a signal yet whether universal semantic layers will be favored, or whether customers will be satisfied with using semantic layers tied to particular BI tools or data platforms. In the meantime, greater investment in this area suggests that more innovation is on the way.
