MinIO Pivots to AI with Launch of AIStor

via Shutterstock

MinIO is one of the most popular open-source S3-compatible object storage systems in the world. Thanks to its combination of performance and simplicity, it's been adopted to store data for a wide range of applications. But with the rapid emergence of generative AI, MinIO recognized an opportunity to deliver an AI-centered object store, and the result is AIStor, which the company launched today.

MinIO founder and CEO AB Periasamy is famously reluctant to add features to the object store. “We try very hard not to add new features,” he told this publication back in 2017. “Last year we removed a considerable amount of code. We honestly try to keep it minimal.”

That minimalist approach has served MinIO very well since the company launched the object store back in November 2014. Two years ago, the company reported the project was serving more than a million Docker pulls per day and 330 million per year. At that rate, MinIO would have more than 1.5 billion downloads by now, making it one of the most popular pieces of open source software in the world.

But that was before ChatGPT landed on us like a ton of bricks in November 2022 and generative AI took off like a rocket. The GenAI revolution, quite simply, has turbo-charged companies’ appetites for big data, said MinIO Chief Marketing Officer Jonathan Symonds.

"We have multiple clients that are over [an] exabyte in terms of data stored on MinIO, and the types of workloads that they're running against that [are] totally different than in the past," Symonds tells BigDATAwire. "So you could maybe get to an exabyte if you were a national lab and it was all in archival and most of it was on tape. But that's not what we're talking about here. We're talking about AI and ML workloads on top of an exabyte of data."

Organizations are collecting massive amounts of unstructured data and storing it on MinIO's object store specifically to build and train AI models. The data could be video, log files, and telemetry coming off of cars. It could be log files for cyber threat detection, or media for streaming services. To serve this emerging storage market, MinIO launched the DataPod reference architecture earlier this year.

The AI use case has grown so popular and important to MinIO’s business that it forced Periasamy to re-evaluate his natural reluctance to add new features and open himself and the fast and thin object store to the twin risks of feature-creep and product-bloat. Instead of continuing to build its (not open source) Enterprise Object Store as a horizontal offering that excels at a wide range of use cases, MinIO decided to double down on AI and re-design the enterprise offering specifically around the emerging requirements for storing and accessing data for AI.

MinIO's new promptObject API lets users query unstructured data, like restaurant receipts (Image source: MinIO)

"Enterprise Object Store…was a complete data infrastructure stack, but it was still general purpose. It's a horizontal product," Periasamy said. "But given how our current success rate in the customer base and the new pipeline is building, increasingly all of them are going towards AI and scale."

Organizations that once felt the pains of big data management at around 100TB are now easily surpassing 100 PB, and the number of companies approaching the 1 EB barrier gets bigger every day. That’s a major change in the market for storage, and that necessitated the creation of AIStor, which is the AI-ification of MinIO’s flagship offering.

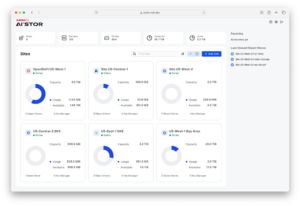

The new AIStor adds AI-specific capabilities to the object store, including a new S3-compatible API, promptObject, that allows users to "talk" to unstructured data, and a private repository for AI models that's a drop-in replacement for Hugging Face. AIStor also adds features that support emerging AI data workloads, such as support for RDMA connections over S3 and a new global console that makes administration easier.

The new promptObject API will enable users to interact with their data, directly and efficiently, using natural language prompts, without requiring them to do a lot of development work around data preparation, vector databases, retrieval augmented generation (RAG), and other GenAI tools and techniques.

For instance, say a customer has an image of a restaurant menu in their object store. Using the promptObject API, a developer can prompt the image to extract the restaurant's street address from the menu and return it as output. The API also supports prompt chaining, which enables the user or application to interact with multiple objects at one time, said Dil Radhakrishnan, a MinIO engineer. The API currently supports unstructured data like text, PDFs, and images, and will soon support video too, he added.

It's a new way to query unstructured data, Periasamy said.

“In the previous generation, when the enterprise was dominated by structured data, you would type a SQL query or something like SQL,” the 2018 Datanami Person to Watch said. “In the modern world, the bulk of the enterprise data is unstructured data. And how do you deal with that data?…You’re essentially treating unstructured data as if it’s a database.”

Support for high-speed Remote Direct Memory Access (RDMA) over 400Gb and 800Gb Ethernet networks is also important, because it helps relieve the network bottlenecks that occur in the massive storage clusters used to feed GPUs.

“The reason why RDMA is very important is now 100Gb is considered to be slow as you bring GPUs to the client side,” Periasamy said. “If you are starting a GPU infrastructure today, you should consider 400Gb as your starting point.”
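Some rough arithmetic shows why 100Gb links start to look slow at this scale. The sketch below computes the idealized time to move one (decimal) petabyte over a single link at 100, 400, and 800 Gb/s. The 1 PB figure and the single-link, zero-overhead assumptions are illustrative only. Real clusters spread traffic across many links, and protocol overhead, contention, and drive throughput all lengthen these times.

```python
def transfer_hours(data_bytes: float, link_gbps: float) -> float:
    """Idealized hours to move data_bytes over one link of link_gbps gigabits/s.

    Assumes the link runs at full line rate with no protocol overhead,
    contention, or storage-side limits (an illustrative simplification).
    """
    bits = data_bytes * 8                  # bytes -> bits
    seconds = bits / (link_gbps * 1e9)     # Gb/s -> bits per second
    return seconds / 3600                  # seconds -> hours


PETABYTE = 1e15  # decimal petabyte, in bytes

if __name__ == "__main__":
    for gbps in (100, 400, 800):
        print(f"{gbps:>3} Gb/s: {transfer_hours(PETABYTE, gbps):.1f} h per PB")
```

Under these assumptions, each fourfold increase in link speed cuts the transfer time by the same factor. Going from 100Gb to 400Gb brings a petabyte from roughly a day down to a few hours per link.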

MinIO worked with Nvidia, AMD, and Intel to ensure that its RoCE (RDMA over Converged Ethernet) version 2 support provides a solid, industry-neutral interface, which is important for encouraging enterprise adoption, Periasamy said.

“We worked closely with Nvidia, AMD, and Intel to do it in a way that is compatible across all three architectures, and the S3 API still remains the S3 API,” he said. “The control channel is over HTTP, but when the data is pushed, whether from CPU to storage or GPU to storage, it’s all RDMA. And we made it S3. Instead of creating a new API specification, we kind of retain the S3 API underneath. The RDMA is transparent so you can take advantage of RDMA without understanding the complexity.”

The new AIHub, meanwhile, provides a facility for MinIO customers to store their AI models securely within their own environment. It's a drop-in replacement for Hugging Face, which is an extremely popular repository for AI models but one that is, by definition, open to the public.

“It runs inside your own four walls, and that’s got huge implications,” Symonds said. “The research we just did showed the number one concern was security and governance. And this allows you to basically have your cake and eat it too.”

This is just the start of the AI capabilities that MinIO has planned for its enterprise object store. The company sees major growth ahead in enabling customers to store and process data for AI, and is eager to build the features into its product to make that happen.

"The reason why we are evolving Enterprise Object Store into AIStor [is] to narrow its use case," Periasamy said. "Don't win hundreds of use cases. Win one use case that is the AI use case, and make it big. This is big enough that we don't care about other things."

Related Items:

MinIO Debuts DataPod, a Reference Architecture for Exascale AI Storage

GenAI Show Us What’s Most Important, MinIO Creator Says: Our Data

Solving Storage Just the Beginning for Minio CEO Periasamy