Companies that want to run traditional enterprise BI workloads but don’t want to involve a traditional data warehouse may be interested in the new Databricks SQL service that became generally available yesterday.

The Databricks SQL service, which was first unveiled in November 2020, brings the ANSI SQL standard to bear on data that’s stored in data lakes. The offering allows customers to bring their favorite queries, visualizations, and dashboards via established BI tools like Tableau, Power BI, and Looker, and run them atop data stored in data lakes on Amazon Web Services and Microsoft Azure (the company’s support for Google Cloud, which only became available 10 months ago, trails the two larger clouds).

Databricks SQL is a key component in the company’s ambition to construct a data lakehouse architecture that blends the best of data lakes, which are based on object storage systems, and traditional warehouses, including MPP-style, column-oriented relational databases.

By storing the unstructured data that’s typically used for AI projects alongside the more structured and refined data that is traditionally queried with BI tools, Databricks hopes to centralize data management processes and simplify data governance and quality enrichment tasks that so often trip up big data endeavors.

“Historically, data teams had to resort to a bifurcated architecture to run traditional BI and analytics workloads, copying subsets of the data already stored in their data lake to a legacy data warehouse,” Databricks employees wrote in a blog post yesterday on the company’s website. “Unfortunately, this led to the lock-in, high costs and complex governance inherent in proprietary architectures.”

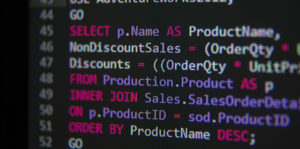

Spark SQL has been a popular open source query engine for BI workloads for many years, and it has certainly been used by Databricks in customer engagements. But Databricks SQL represents a path forward beyond Spark SQL’s roots into the world of industry standard ANSI SQL. Databricks aims to make the migration to the new query engine easy.

“We do this by switching out the default SQL dialect from Spark SQL to Standard SQL, augmenting it to add compatibility with existing data warehouses, and adding quality control for your SQL queries,” company employees wrote in a November 16 blog post announcing ANSI SQL as the default for the (then beta) Databricks SQL offering. “With the SQL standard, there are no surprises in behavior or unfamiliar syntax to look up and learn.”
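To make the "no surprises" point concrete, here is a minimal Python sketch of the kind of behavioral difference a stricter, standards-style dialect introduces. It is a simulation, not Databricks code: the function names `lenient_cast_to_int` and `strict_cast_to_int` are hypothetical, and the assumption is that a lenient dialect silently turns a malformed value into NULL while an ANSI-style dialect raises an error instead.

```python
from typing import Optional


def lenient_cast_to_int(text: str) -> Optional[int]:
    """Simulate a lenient cast: malformed input silently becomes NULL (None)."""
    try:
        return int(text.strip())
    except ValueError:
        return None


def strict_cast_to_int(text: str) -> int:
    """Simulate an ANSI-style cast: malformed input raises instead of hiding."""
    try:
        return int(text.strip())
    except ValueError as exc:
        raise ValueError(f"invalid input for integer cast: {text!r}") from exc


# A bad value slips through the lenient cast as None...
print(lenient_cast_to_int("4x2"))

# ...but surfaces immediately under the strict cast.
try:
    strict_cast_to_int("4x2")
except ValueError as err:
    print(f"error: {err}")
```

The design trade-off is the usual one: the lenient version keeps a query running but can hide bad data in a dashboard, while the strict version fails loudly at the point where the problem occurs.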

With the non-standard syntax out of the way, one of the few remaining BI dragons to slay was performance. While users have been running SQL queries on data stored in object storage and S3-compatible blob stores for some time, performance has always been an issue. For the most demanding ad-hoc workloads, the conventional wisdom says, the performance and storage optimizations built into traditional column-oriented MPP databases have always delivered better response times. Even backers of data lake analytics, such as Dremio, have conceded this fact.

With Databricks SQL, the San Francisco company is attempting to smash that conventional wisdom to smithereens. Databricks released a benchmark result last month that saw the Databricks SQL service delivering 2.7x faster performance than Snowflake, with a 12x advantage in price-performance on the 100TB TPC-DS test.

“This result proves beyond any doubt that this is possible and achievable by the lakehouse architecture,” the company crowed. “Databricks has been rapidly developing full blown data warehousing capabilities directly on data lakes, bringing the best of both worlds in one data architecture dubbed the data lakehouse.”

(Snowflake, by the way, did not take that TPC-DS benchmark lying down. In a November 12 blog post titled “Industry Benchmarks and Competing with Integrity,” the company says it has avoided “engaging in benchmarking wars and making competitive performance claims divorced from real-world experiences.” The company also ran its own TPC-DS 100TB benchmark atop AWS infrastructure and–surprise!–found that its system outperformed Databricks by a significant margin. However, the results were not audited.)
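The two headline figures from the Databricks claim, a 2.7x speedup and a 12x price-performance advantage, are related by simple arithmetic. The sketch below is a rough illustration only. It assumes that "price-performance" means the total cost to complete the same workload and that cost equals an hourly rate multiplied by run time. Neither assumption comes from the benchmark report itself, and the function name `implied_hourly_rate_ratio` is hypothetical.

```python
def implied_hourly_rate_ratio(speedup: float, price_perf_advantage: float) -> float:
    """Return how many times higher the slower system's hourly rate would be.

    Assumes cost = hourly_rate * run_time, so the cost ratio (the
    price-performance advantage) equals rate_ratio * speedup.
    """
    if speedup <= 0 or price_perf_advantage <= 0:
        raise ValueError("both ratios must be positive")
    return price_perf_advantage / speedup


# Figures cited in the article: 2.7x faster, 12x better price-performance.
ratio = implied_hourly_rate_ratio(speedup=2.7, price_perf_advantage=12.0)
print(f"implied hourly-rate ratio: {ratio:.2f}x")  # prints 4.44x
```

Under those assumptions, speed accounts for only part of the claimed gap; the remainder would have to come from a difference in what each configuration costs per hour.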

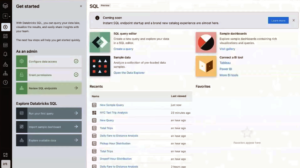

Databricks has built a full analytics experience around Databricks SQL. The service includes a Data Explorer that lets users dive into their data, including any changes to the data, which are tracked via Delta tables. It also features integration with ETL tools, such as those from Fivetran.

Users can interact with data directly through the Databricks SQL interface or use supported BI tools.

Every Databricks SQL service features a SQL endpoint, which is where users can submit queries. Users are given “t-shirt” size instance choices; the workloads will also elastically scale (there is also a serverless option). Users can construct their SQL queries within the Databricks SQL interface, or work with one of Databricks’ BI partners, such as Tableau, Qlik, or TIBCO Spotfire, and have those BI tools send queries to the Databricks SQL endpoint. Users can create dashboards, visualizations, and even generate alerts based on data values specified in Databricks SQL.

While Databricks SQL has been in beta for a year, the company says it has more than 1,000 companies already using it. Among the current customers cited by Databricks are the Australian software company Atlassian, which is using Databricks SQL to deliver analytics to more than 190,000 external users; restaurant loyalty and engagement platform Punchh, which is sharing visualizations with its users via Tableau; and video game maker SEGA Europe, which migrated its traditional data warehouse to the Databricks Lakehouse.

Now that Databricks SQL is GA, the company says that “you can expect the highest level of stability, support, and enterprise-readiness from Databricks for mission-critical workloads.”

Related Items:

Databricks Unveils Data Sharing, ETL, and Governance Solutions

Will Databricks Build the First Enterprise AI Platform?

Databricks Now on Google Cloud
