Raising a Platform to Meet New Verticals

Despite wide technological and industry chasms, there is a growing sense of universality when it comes to the general needs big data technologies are fulfilling. While companies in this ever-growing space tend to have their eyes on a key set of verticals, it’s often not a stretch to extend the usefulness of their offerings outside of their core markets.

Metamarkets is a good example of this universality. The company claims it can leverage the three keywords of today’s big data craze (Hadoop, cloud and in-memory) to power the events-based needs of big media, social and gaming companies at web scale.

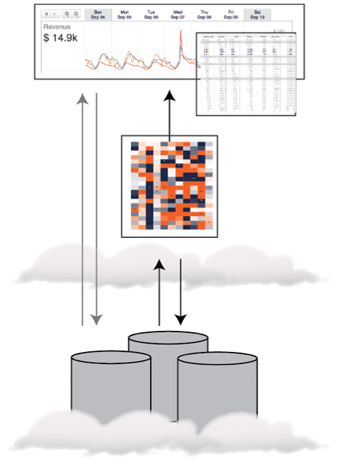

Metamarkets brings a purpose-built analytics engine to the table, delivered as a cloud-based service that runs on Amazon’s platform, including Elastic MapReduce and S3. According to the company’s VP of marketing, Ken Chestnut, making use of the cloud lets the company direct resources into its core technology (Druid comes to mind) instead of wasting cycles “reinventing the wheel” on cloud storage and computing infrastructure.

It could be a new day for once highly vertical-specific companies like Metamarkets, whose purpose-built big data products and platforms could find new appeal in verticals that might once have been completely out of reach. Chestnut told us this is due, in part, to a convergence of trends and needs that platforms like theirs, originally built to handle big web data at massive scale, were (unwittingly) made to serve.

While Chestnut wouldn’t name specific new market opportunities, noting only that the company is still evaluating the full needs of other industries, he pointed to three threads that tie its core industries to the wider world of enterprise big data needs:

- Ever-increasing volumes and sources of event-based data

- Current difficulties transforming that data into actionable insight using existing solutions

- Realization that tremendous competitive edge can be gained by shortening time to insight

Chestnut says these challenges have become more acute for several reasons, the most important of which is that with more events and transactions moving online, there is greater opportunity to measure and quantify results in ways that are non-intrusive to end users. As a result, he says, companies have started “instrumenting” all aspects of their operations.

He continued, noting that traditional systems were not designed to handle the volume, velocity, and variety of data that these companies are capturing. “The consequence is that data is being generated faster than it can be processed and consumed resulting in a longer time to insight (the lag time between when data is captured and when it is available for analysis).”

Chestnut noted that customers in the company’s core verticals, which include big-time web publishing, social media and gaming (all major verticals that depend on event-based data), wanted the ability to “slice and dice,” roll up, and drill down on events-based data (click streams, ad impressions, user actions, etc.) by time, region, gender, and other considerations.
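To make those terms concrete, here is a minimal Python sketch of rolling up and drilling down on event records. It is an illustration only, not Metamarkets’ or Druid’s interface: the `EVENTS` sample, its field names, and the `roll_up` helper are all hypothetical.

```python
from collections import Counter

# Hypothetical event records; the field names and values are illustrative only.
EVENTS = [
    {"hour": 10, "region": "US", "gender": "F", "type": "click"},
    {"hour": 10, "region": "US", "gender": "M", "type": "impression"},
    {"hour": 10, "region": "EU", "gender": "F", "type": "click"},
    {"hour": 11, "region": "US", "gender": "F", "type": "click"},
]


def roll_up(events, dimensions, **filters):
    """Count events grouped by `dimensions`, keeping only rows that match `filters`."""
    counts = Counter()
    for event in events:
        if all(event[key] == value for key, value in filters.items()):
            counts[tuple(event[dim] for dim in dimensions)] += 1
    return dict(counts)


# Roll up: total events per region.
by_region = roll_up(EVENTS, ["region"])

# Drill down: within the US slice, break events out by hour and gender.
us_by_hour_gender = roll_up(EVENTS, ["hour", "gender"], region="US")
```

The same function covers both directions: fewer dimensions give a coarser roll-up, while adding dimensions or filters drills into a slice.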

Chestnut said that at first the company investigated a number of relational and NoSQL-based alternatives, but none achieved the speed and scale required. As a result, it developed its own distributed, in-memory OLAP data store, Druid.

As Chestnut told us, “To overcome performance issues typically associated with scanning tables, Druid stores data in memory. The traditional limitation with this approach, however, is that memory is limited. Therefore, we distribute data over multiple machines and parallelize queries to speed processing and handle increasing data volumes. Our customers are able to scan, filter, and aggregate billions of rows of data at ‘human time’ with the ability to trade-off performance vs cost.”
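The general pattern Chestnut describes (split the data across machines, scan each piece in parallel, then merge the partial results) can be sketched in a few lines of Python. This is a toy under stated assumptions, not Druid’s implementation: threads stand in for separate machines, and `partition`, `scan_shard`, and `parallel_count` are hypothetical names.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def partition(rows, n_shards):
    """Split rows round-robin into in-memory shards (stand-ins for machines)."""
    return [rows[i::n_shards] for i in range(n_shards)]


def scan_shard(shard, dimension, predicate):
    """Scan one shard: keep rows passing the filter, then count by dimension."""
    return Counter(row[dimension] for row in shard if predicate(row))


def parallel_count(shards, dimension, predicate):
    """Run the same scan on every shard concurrently and merge partial counts."""
    total = Counter()
    with ThreadPoolExecutor(max_workers=len(shards)) as pool:
        partials = pool.map(lambda s: scan_shard(s, dimension, predicate), shards)
        for partial in partials:
            total.update(partial)
    return dict(total)


# Illustrative data: 12 synthetic events spread across 4 shards.
rows = [
    {"region": "US" if i % 3 else "EU", "type": "click" if i % 2 else "impression"}
    for i in range(12)
]
shards = partition(rows, 4)
clicks_by_region = parallel_count(shards, "region", lambda r: r["type"] == "click")
```

Because counts from each shard simply add together, more shards can be introduced as data volumes grow without changing the query logic, which is the performance-versus-cost trade-off the quote alludes to.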

On that note, the concept of handling requests in “human time” is important to the company and plays into its strategy around Hadoop. Chestnut says that Hadoop is very complementary to Metamarkets (and vice versa). “While Hadoop has tremendous advantages processing data at scale, it does not respond to ad-hoc queries in human time. This is where Metamarkets shines. We use Hadoop to pre-process data and prepare it for fast queries in Druid. When users log into Metamarkets, they can explore data in real-time without limits in terms of navigation or speed.”
