SuperAnnotate and the Quest for Superior AI Training Data

(ESB-Professional/Shutterstock)

If data is the source of AI, then it follows that the best data creates the best AI. But where does one find ultra high-quality data? According to the folks at SuperAnnotate, that type of data does not exist naturally. Instead, you must create it by enriching your existing digital stock, which is the goal of the company and its product.

As its name suggests, SuperAnnotate is in the business of data annotation, or data labeling. That could include putting bounding boxes around humans in a computer vision use cases, or identifying the tone of a conversation in a natural language processing (NLP) use case. But data annotation is only just the beginning for SuperAnnotate, which helps automate additional data tasks that are needed to create training data of the highest quality.

“We start from data labeling but then we kind of grow and centralize a bunch of other data operations related to training data,” says SuperAnnotate Co-founder and CEO Vahan Petrosyan. “The focus is still the training data. But people stay in our platform because we manage that data well afterwards.”

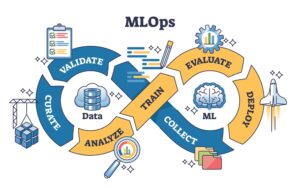

For instance, in addition to labeling and annotation, the SuperAnnotate product helps data engineers and data scientists explore data using visualization tools, build CI/CD data orchestration pipelines for training data, generate synthetic data, and evaluate how AI models perform with certain data sets. It helps to automate machine learning operations, or MLOps.

“The big value that we have is that we give you a bunch of different tools to create a small subset of highly curated, highly accurate data set to improve massively your model performance,” Petrosyan says.

Curating Quality Data

Vahan Petrosyan co-founded SuperAnnotate in 2018 with his brother, Tigran Petrosyan. The Armenian brothers were both PhD candidates at European universities, with Vahan studying machine learning at the KTH Royal Institute of Technology in Sweden and Tigran studying physics at the University of Bern in Switzerland.

Vahan was developing a machine learning technique at university that leveraged “super pixels” for computer vision. Instead of continuing with his degree, he decided to use the super pixel discovery as the basis for a company, dubbed SuperAnnotate, which they co-founded with two other engineers, Jason Liang and Davit Badalyan.

In January 2019, SuperAnnotate joined UC Berkeley’s SkyDeck accelerator program, and moves its headquarters to Silicon Valley. After launching its first data annotation product in 2020, it raised more than $17 million over the next year and a half.

It concentrated its efforts on integration its data annotation platform with major data platforms, such as Databricks, Snowflake, AWS, GCP, and Microsft Azure, to allow direct integration with the data.

When the generative AI revolution hit in late 2022, SuperAnnotate adopted its software to assist with fine-tuning of large language models (LLMs). Its been widely adopted by some fairly large companies, including Nvidia, which was impressed enough with the product that it decided to become an investor with the November 20204 Series B round that raised $36 million.

‘Evals Are All You Need’

One of the secrets to creating better data for AI models–or what Petrosyan calls “super data”–is having a well-defined and controlled evaluation process. The eval process, in turn, is critical to improving AI performance over time using reinforcement learning through human feedback (RLHF).

One of the most effective eval techniques involves creating highly detailed question-answer pairs, Petrosyan says. These question-answer pairs instruct how the human data labelers and annotators should label and annotate the data to create the type of AI that is desired.

“Humans should collaborate with AI, at least to evaluate the synthetic data that is being generated, to evaluate the question-answer pairs that are being written,” Petrosyan tells BigDATAwire. “And that data is becoming more or less the super data that we’re discussing.”

By guiding how the data labeling and annotation is done, the question-answer pairs allow organizations to fine-tune the behavior of black box AI models, without changing any weights or parameters in the AI model itself. These question-answer pairs can range in length from a couple of pages to up to 60 pages, and are critical for addressing edge cases.

“If you’re Ford and you’re deploying your chatbot, it shouldn’t really say that Tesla is a better car than Ford,” Petrosyan says. “And some chatbots will say that. But you have to control all of that by just providing examples, or labeling two different answers, that this is the way that I prefer it to be answered compared to this other way, which says Tesla is a better car than Ford.”

The eval step is a critical but undervalued function in AI, Petrosyan says. The OpenAI’s of the world understand how valuable it can be to keep feeding your AI with good, clean examples of how you want the AI to behave, but many other players are missing out on this important step.

“If you’re not very clear, there are tons of edge cases that are appearing and they’re producing a worse quality data as a result,” he says. “One of the co-founders of OpenAI [President Greg Brockman] said evals are all you need to improve the LLM model.”

SuperAnnotate’s goals is to help customers create better data for AI, not more data. Data volume is not a good replacement for data quality.

“Every small, tiny device is collecting so much data that it’s almost not useful data,” Petrosyan says. “But how do you create intelligent data? That super data is going to be your next oil.”

Related Items:

Data At More Than Half Of Companies Will Not Be AI-Ready By The End of 2024

To Prevent Generative AI Hallucinations and Bias, Integrate Checks and Balances

The Top Five Data Labeling Firms According to Everest Group